H2O Eval Studio

H2O.ai’s h2o_sonar is a Python package for introspecting machine learning models. It enables various facets of Responsible AI for both predictive and generative models.

It incorporates methods for model explanation (to understand, trust, and ensure the fairness of models through bias detection and remediation), model debugging for accuracy, privacy, and security, model assessment, and documentation.

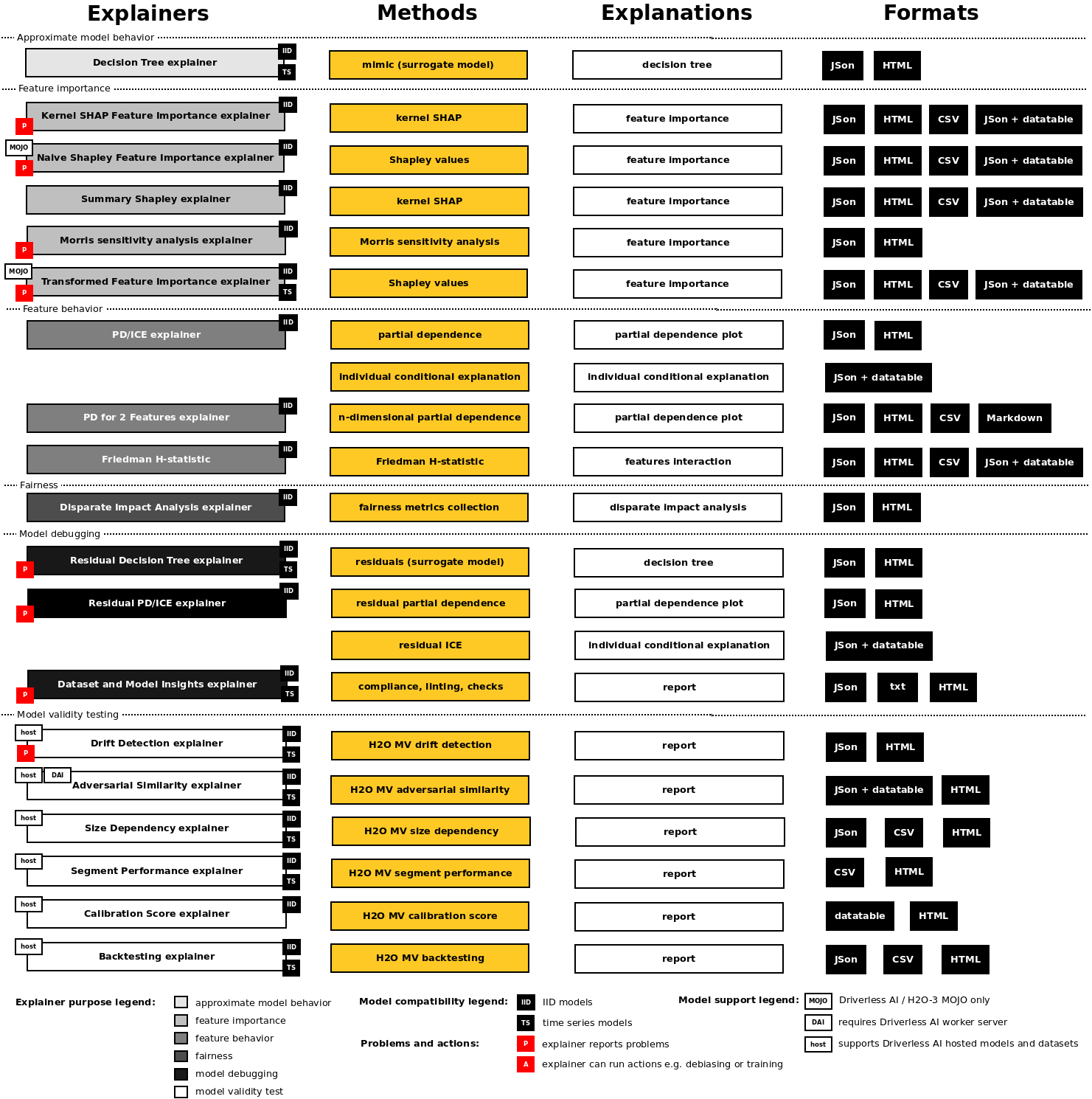

Predictive AI

This package enables a new, holistic, low-risk, human-interpretable, fair, and trustable approach to machine learning. H2O-3, scikit-learn, and Driverless AI models are first-class citizens of the package, and the package is intended to accommodate several other types of Python models. Specifically, the product:

- Explains many types of models.

- Assesses the observational fairness of many types of models.

- Debugs many types of models.

- Integrates with Enterprise h2oGPT for model insights and problem mitigation plans.
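To make "observational fairness" more concrete, the following is a minimal sketch of one common observational measure, the adverse impact ratio: the rate of favorable predictions in each group divided by the rate in a reference group. It is plain Python and does not use the h2o_sonar API; the function name `adverse_impact_ratios`, the sample data, and the 0.8 cut-off (the widely cited four-fifths rule) are assumptions made for illustration only.

```python
from collections import defaultdict


def adverse_impact_ratios(groups, predictions, reference_group):
    """Favorable-prediction rate of each group divided by the reference group's rate.

    Hypothetical helper for illustration; not part of h2o_sonar.
    A prediction equal to 1 is treated as the favorable outcome.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        favorable[group] += int(prediction == 1)

    rates = {group: favorable[group] / totals[group] for group in totals}
    reference_rate = rates[reference_group]
    if reference_rate == 0:
        raise ValueError("reference group has no favorable predictions")
    return {group: rate / reference_rate for group, rate in rates.items()}


# Made-up example data: group membership and binary model predictions.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

ratios = adverse_impact_ratios(groups, predictions, reference_group="a")

# Flag groups whose ratio falls below the assumed 0.8 cut-off.
flagged = sorted(group for group, ratio in ratios.items() if ratio < 0.8)
print(ratios, flagged)
```

A measure like this only looks at observed predictions per group, which is why it is called observational; it says nothing about why the rates differ.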

The functionality is available to engineers and data scientists through a Python API and a command-line interface (CLI).

Supported environments & Python versions:

- Driverless AI MOJO runtime (the daimojo library) supports Linux only.

- H2O Model Validation based explainers are not available on Python 3.11 because the Driverless AI client is not available for this runtime.
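The two constraints above can be checked before choosing which explainers to run. The sketch below uses only the Python standard library; the helper names `daimojo_platform_ok` and `model_validation_blocked_by_python` are hypothetical and not part of h2o_sonar. It encodes only what is stated here (Linux for daimojo, no Model Validation explainers on Python 3.11) and makes no claim about which other Python versions are supported.

```python
import platform
import sys


def daimojo_platform_ok(system=None):
    """Return True when the operating system is Linux.

    Hypothetical helper: the daimojo library is stated to support Linux only.
    """
    system = platform.system() if system is None else system
    return system == "Linux"


def model_validation_blocked_by_python(version=None):
    """Return True when running on Python 3.11.

    Hypothetical helper: H2O Model Validation based explainers are stated to be
    unavailable on Python 3.11. A False result does not prove that the current
    version is supported; it only means this specific restriction does not apply.
    """
    version = sys.version_info if version is None else version
    return tuple(version[:2]) == (3, 11)


if __name__ == "__main__":
    print("daimojo platform OK:", daimojo_platform_ok())
    print("Model Validation explainers blocked:", model_validation_blocked_by_python())
```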

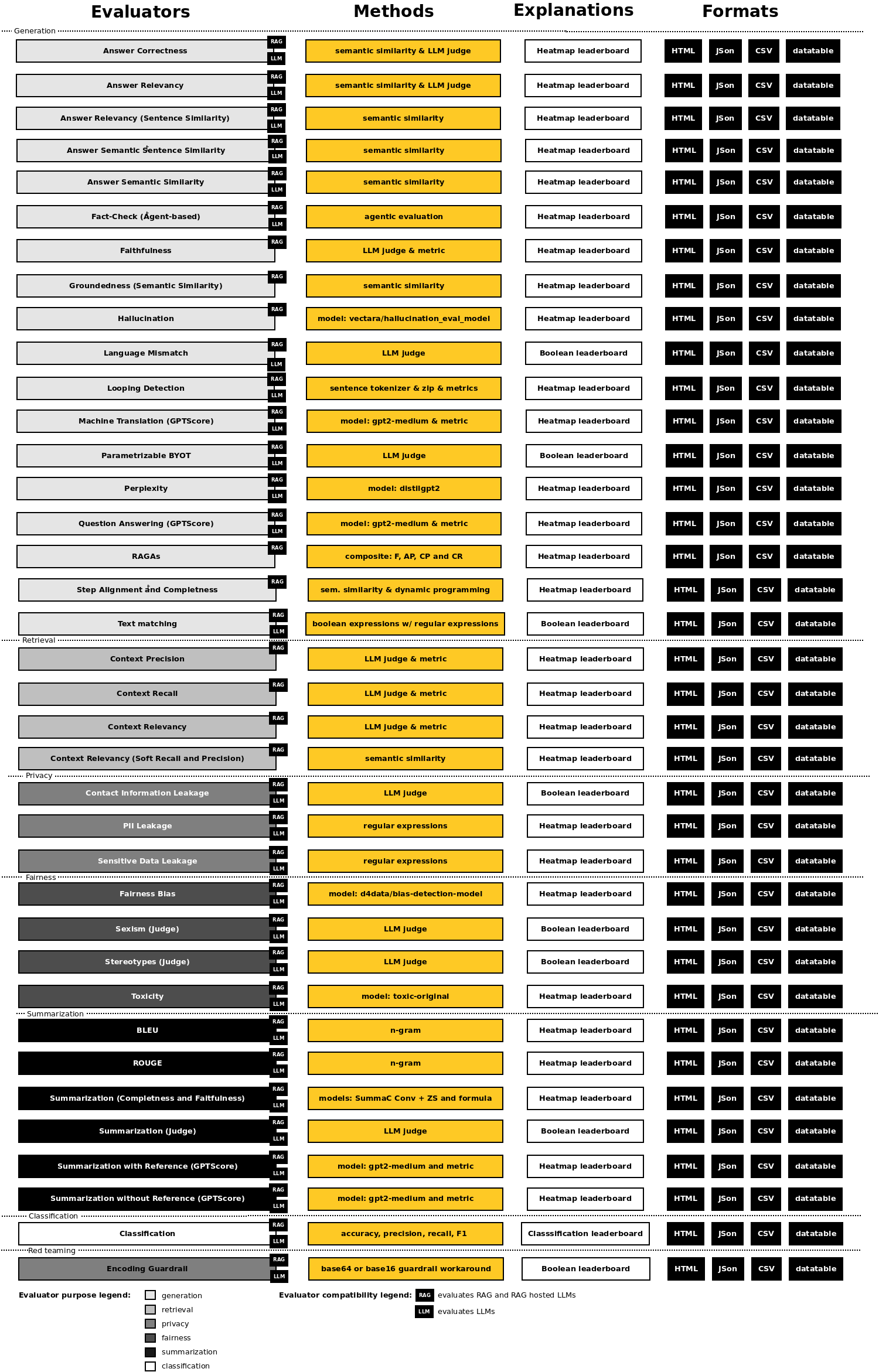

Generative AI

h2oGPTe, h2oGPT, H2O LLMOps/MLOps, OpenAI, Microsoft Azure OpenAI, Amazon Bedrock, and ollama RAG/LLM hosts are first-class citizens of H2O Eval Studio.

Installation

Getting Started

Documentation

- H2O Eval Studio Documentation

- Terminology

- Library Configuration

- Predictive Models

- Interpreting Models

- Interpreting Datasets

- Interpretation Parameters

- Explainers

- Explainer Parameters

- Surrogate Decision Tree Explainer

- Residual Surrogate Decision Tree Explainer

- Shapley Summary Plot for Original Features (Naive Shapley Method) Explainer

- Shapley Values for Original Features (Kernel SHAP Method) Explainer

- Shapley Values for Original Features of MOJO Models (Naive Method) Explainer

- Shapley Values for Transformed Features of MOJO Models Explainer

- Partial Dependence/Individual Conditional Expectations (PD/ICE) Explainer

- Partial Dependence for 2 Features Explainer

- Residual Partial Dependence/Individual Conditional Expectations (PD/ICE) Explainer

- Disparate Impact Analysis (DIA) Explainer

- Morris sensitivity analysis Explainer

- Friedman’s H-statistic Explainer

- Dataset and Model Insights Explainer

- Adversarial Similarity Explainer

- Backtesting Explainer

- Calibration Score Explainer

- Drift Detection Explainer

- Segment Performance Explainer

- Size Dependency Explainer

- Generative Models

- Evaluating RAGs and LLMs

- RAG and LLM Hosts

- Test Case, Suite, Lab and LLM Dataset

- Evaluator Parameters

- Evaluators

- Answer Correctness Evaluator

- Answer Semantic Similarity Evaluator

- Answer Semantic Sentence Similarity Evaluator

- Context Relevancy Evaluator

- Context Relevancy (Soft Recall and Precision) Evaluator

- Groundedness (Semantic Similarity) Evaluator

- Hallucination Evaluator

- RAGAS Evaluator

- Text Matching Evaluator

- Context Precision Evaluator

- Fact-Check (Agent-based) Evaluator

- Faithfulness Evaluator

- Context Recall Evaluator

- Answer Relevancy Evaluator

- Answer Relevancy (Sentence Similarity) Evaluator

- PII Leakage Evaluator

- Encoding Guardrail Evaluator

- Sensitive Data Leakage Evaluator

- Toxicity Evaluator

- Fairness Bias Evaluator

- Contact Information Evaluator

- Language Mismatch (Judge) Evaluator

- Looping Detection Evaluator

- Parameterizable BYOP Evaluator

- Perplexity Evaluator

- Sexism (Judge) Evaluator

- Step Alignment and Completeness Evaluator

- Stereotypes (Judge) Evaluator

- Summarization (Completeness and Faithfulness) Evaluator

- Summarization (Judge) Evaluator

- Summarization with reference (GPTScore) Evaluator

- Summarization without reference (GPTScore) Evaluator

- BLEU Evaluator

- ROUGE Evaluator

- Classification Evaluator

- Machine Translation (GPTScore) Evaluator

- Question Answering (GPTScore) Evaluator

- BYOJ: Bring Your Own Judge

- BYOP: Bring Your Own Prompt

- Perturbations

- Perturbations Step-by-Step

- Random Character Perturbation

- Y/Z Perturbation

- Comma Perturbation

- Word Swap Perturbation

- Synonym Perturbation

- Antonym Perturbation

- Random Character Insertion Perturbation

- Random Character Deletion Perturbation

- Random Character Replacement Perturbation

- Keyboard Typos Perturbation

- OCR Error Character Perturbation

- Contextual Misinformation Perturbation

- Perturbations API

- Using Perturbations to Assess Model Robustness

- Report and Results

- Talk to Report

Extensibility

Examples

Python API

- H2O Eval Studio Python API

- h2o_sonar package

- Subpackages

- h2o_sonar.explainers package

- Submodules

- h2o_sonar.explainers.adversarial_similarity_explainer module

AdversarialSimilarityExplainer, AdversarialSimilarityExplainer.CLASS_ONE_AND_ONLY, AdversarialSimilarityExplainer.DEFAULT_DROP_COLS, AdversarialSimilarityExplainer.DEFAULT_SHAPLEY_VALUES, AdversarialSimilarityExplainer.PARAM_DROP_COLS, AdversarialSimilarityExplainer.PARAM_SHAPLEY_VALUES, AdversarialSimilarityExplainer.PARAM_WORKER, AdversarialSimilarityExplainer.PLOT_TITLE, AdversarialSimilarityExplainer.Result, AdversarialSimilarityExplainer.check_compatibility(), AdversarialSimilarityExplainer.explain(), AdversarialSimilarityExplainer.get_result(), AdversarialSimilarityExplainer.setup()

- h2o_sonar.explainers.backtesting_explainer module

BacktestingExplainer, BacktestingExplainer.DEFAULT_CUSTOM_DATES, BacktestingExplainer.DEFAULT_FORECAST_PERIOD_UNIT, BacktestingExplainer.DEFAULT_NUMBER_OF_FORECAST_PERIODS, BacktestingExplainer.DEFAULT_NUMBER_OF_SPLITS, BacktestingExplainer.DEFAULT_NUMBER_OF_TRAINING_PERIODS, BacktestingExplainer.DEFAULT_SPLIT_TYPE, BacktestingExplainer.DEFAULT_TIME_COLUMN, BacktestingExplainer.DEFAULT_TRAINING_PERIOD_UNIT, BacktestingExplainer.OPT_SPLIT_TYPE_AUTO, BacktestingExplainer.OPT_SPLIT_TYPE_CUSTOM, BacktestingExplainer.PARAM_CUSTOM_DATES, BacktestingExplainer.PARAM_FORECAST_PERIOD_UNIT, BacktestingExplainer.PARAM_NUMBER_OF_FORECAST_PERIODS, BacktestingExplainer.PARAM_NUMBER_OF_SPLITS, BacktestingExplainer.PARAM_NUMBER_OF_TRAINING_PERIODS, BacktestingExplainer.PARAM_PLOT_TYPE, BacktestingExplainer.PARAM_SPLIT_TYPE, BacktestingExplainer.PARAM_TIME_COLUMN, BacktestingExplainer.PARAM_TRAINING_PERIOD_UNIT, BacktestingExplainer.PARAM_WORKER, BacktestingExplainer.check_compatibility(), BacktestingExplainer.explain(), BacktestingExplainer.get_result(), BacktestingExplainer.setup()

- h2o_sonar.explainers.calibration_score_explainer module

CalibrationScoreExplainer, CalibrationScoreExplainer.COL_PROB_PRED, CalibrationScoreExplainer.COL_PROB_TRUE, CalibrationScoreExplainer.COL_SCORE, CalibrationScoreExplainer.COL_TARGET, CalibrationScoreExplainer.DEFAULT_BIN_STRATEGY, CalibrationScoreExplainer.DEFAULT_NUMBER_OF_BINS, CalibrationScoreExplainer.KEY_BRIER_SCORE, CalibrationScoreExplainer.KEY_CALIBRATION_CURVE, CalibrationScoreExplainer.KEY_CLASSES_LABELS, CalibrationScoreExplainer.KEY_CLASSES_LEGENDS, CalibrationScoreExplainer.KEY_DATA, CalibrationScoreExplainer.KEY_PLOTS_PATHS, CalibrationScoreExplainer.OPT_BIN_STRATEGY_QUANTILE, CalibrationScoreExplainer.OPT_BIN_STRATEGY_UNIFORM, CalibrationScoreExplainer.PARAM_BIN_STRATEGY, CalibrationScoreExplainer.PARAM_NUMBER_OF_BINS, CalibrationScoreExplainer.PARAM_WORKER, CalibrationScoreExplainer.RESULT_FILE_JSON, CalibrationScoreExplainer.Result, CalibrationScoreExplainer.check_compatibility(), CalibrationScoreExplainer.explain(), CalibrationScoreExplainer.get_result(), CalibrationScoreExplainer.normalize_to_gom(), CalibrationScoreExplainer.setup()

- h2o_sonar.explainers.dataset_and_model_insights_explainer module

- h2o_sonar.explainers.dia_explainer module

DiaArgs, DiaExplainer, DiaExplainer.PARAM_CUT_OFF, DiaExplainer.PARAM_FAST_APPROX, DiaExplainer.PARAM_FEATURES, DiaExplainer.PARAM_FEATURE_NAME, DiaExplainer.PARAM_FEATURE_SUMMARIES, DiaExplainer.PARAM_MAXIMIZE_METRIC, DiaExplainer.PARAM_MAX_CARD, DiaExplainer.PARAM_MIN_CARD, DiaExplainer.PARAM_NAME, DiaExplainer.PARAM_NUM_CARD, DiaExplainer.PARAM_SAMPLE_SIZE, DiaExplainer.PARAM_USE_HOLDOUT_PREDS, DiaExplainer.check_compatibility(), DiaExplainer.explain(), DiaExplainer.get_entry_constants(), DiaExplainer.get_max_metric(), DiaExplainer.get_result(), DiaExplainer.is_enabled(), DiaExplainer.setup()

- h2o_sonar.explainers.drift_explainer module

DriftDetectionExplainer, DriftDetectionExplainer.DEFAULT_DRIFT_THRESHOLD, DriftDetectionExplainer.DEFAULT_DROP_COLS, DriftDetectionExplainer.PARAM_DRIFT_THRESHOLD, DriftDetectionExplainer.PARAM_DROP_COLS, DriftDetectionExplainer.PARAM_WORKER, DriftDetectionExplainer.check_compatibility(), DriftDetectionExplainer.explain(), DriftDetectionExplainer.get_result(), DriftDetectionExplainer.setup()

- h2o_sonar.explainers.dt_surrogate_explainer module

DecisionTreeConstants, DecisionTreeConstants.CAT_ENCODING_DICT, DecisionTreeConstants.CAT_ENCODING_LIST, DecisionTreeConstants.COLUMN_DAI_PREDICT, DecisionTreeConstants.COLUMN_DT_PATH, DecisionTreeConstants.COLUMN_MODEL_PRED, DecisionTreeConstants.COLUMN_ORIG_PRED, DecisionTreeConstants.DEFAULT_NFOLDS, DecisionTreeConstants.DEFAULT_TREE_DEPTH, DecisionTreeConstants.DIR_DT_SURROGATE, DecisionTreeConstants.ENC_AUTO, DecisionTreeConstants.ENC_ENUM_LTD, DecisionTreeConstants.ENC_LE, DecisionTreeConstants.ENC_ONE_HOT, DecisionTreeConstants.ENC_SORT, DecisionTreeConstants.FILE_DEFAULT_DETAILS, DecisionTreeConstants.FILE_DEFAULT_TREE, DecisionTreeConstants.FILE_DRF_VAR_IMP, DecisionTreeConstants.FILE_METRICS_DT, DecisionTreeConstants.FILE_METRICS_DT_MULTI_PREFIX, DecisionTreeConstants.FILE_WORK_DT, DecisionTreeConstants.FILE_WORK_DT_MULTI_PREFIX, DecisionTreeConstants.H2O_ENCODING_NAMES, DecisionTreeConstants.H2O_ENC_AUTO, DecisionTreeConstants.H2O_ENC_ENUM_LTD, DecisionTreeConstants.H2O_ENC_LE, DecisionTreeConstants.H2O_ENC_ONE_HOT, DecisionTreeConstants.H2O_ENC_SORT, DecisionTreeConstants.KEY_LABELS_MAP, DecisionTreeConstants.SEED

DecisionTreeSurrogateExplainer, DecisionTreeSurrogateExplainer.PARAM_CAT_ENCODING, DecisionTreeSurrogateExplainer.PARAM_DEBUG_RESIDUALS, DecisionTreeSurrogateExplainer.PARAM_DEBUG_RESIDUALS_CLASS, DecisionTreeSurrogateExplainer.PARAM_DT_DEPTH, DecisionTreeSurrogateExplainer.PARAM_NFOLDS, DecisionTreeSurrogateExplainer.PARAM_QBIN_COLS, DecisionTreeSurrogateExplainer.PARAM_QBIN_COUNT, DecisionTreeSurrogateExplainer.check_compatibility(), DecisionTreeSurrogateExplainer.explain(), DecisionTreeSurrogateExplainer.explain_local(), DecisionTreeSurrogateExplainer.get_result(), DecisionTreeSurrogateExplainer.is_enabled(), DecisionTreeSurrogateExplainer.setup()

- h2o_sonar.explainers.fi_kernel_shap_explainer module

KernelShapFeatureImportanceExplainer, KernelShapFeatureImportanceExplainer.OPT_BIN_1_CLASS, KernelShapFeatureImportanceExplainer.PARAM_FAST_APPROX, KernelShapFeatureImportanceExplainer.PARAM_L1, KernelShapFeatureImportanceExplainer.PARAM_LEAKAGE_WARN_THRESHOLD, KernelShapFeatureImportanceExplainer.PARAM_MAXRUNTIME, KernelShapFeatureImportanceExplainer.PARAM_NSAMPLE, KernelShapFeatureImportanceExplainer.check_compatibility(), KernelShapFeatureImportanceExplainer.explain(), KernelShapFeatureImportanceExplainer.explain_problems(), KernelShapFeatureImportanceExplainer.get_result(), KernelShapFeatureImportanceExplainer.setup()

- h2o_sonar.explainers.fi_naive_shapley_explainer module

NaiveShapleyMojoFeatureImportanceExplainer, NaiveShapleyMojoFeatureImportanceExplainer.DEFAULT_FAST_APPROX, NaiveShapleyMojoFeatureImportanceExplainer.OPT_BIN_1_CLASS, NaiveShapleyMojoFeatureImportanceExplainer.PARAM_FAST_APPROX, NaiveShapleyMojoFeatureImportanceExplainer.PARAM_LEAKAGE_WARN_THRESHOLD, NaiveShapleyMojoFeatureImportanceExplainer.PARAM_SAMPLE_SIZE, NaiveShapleyMojoFeatureImportanceExplainer.PREFIX_CONTRIB, NaiveShapleyMojoFeatureImportanceExplainer.check_compatibility(), NaiveShapleyMojoFeatureImportanceExplainer.explain(), NaiveShapleyMojoFeatureImportanceExplainer.explain_local(), NaiveShapleyMojoFeatureImportanceExplainer.explain_problems(), NaiveShapleyMojoFeatureImportanceExplainer.get_result(), NaiveShapleyMojoFeatureImportanceExplainer.setup()

- h2o_sonar.explainers.friedman_h_statistic_explainer module

- h2o_sonar.explainers.morris_sa_explainer module

- h2o_sonar.explainers.pd_2_features_explainer module

PdFor2FeaturesArgs, PdFor2FeaturesExplainer, PdFor2FeaturesExplainer.FILE_Y_HAT, PdFor2FeaturesExplainer.GRID_RESOLUTION, PdFor2FeaturesExplainer.MAX_FEATURES, PdFor2FeaturesExplainer.OPT_ICE_1_FRAME_ENABLED, PdFor2FeaturesExplainer.PARAM_FEATURES, PdFor2FeaturesExplainer.PARAM_GRID_RESOLUTION, PdFor2FeaturesExplainer.PARAM_MAX_FEATURES, PdFor2FeaturesExplainer.PARAM_OOR_GRID_RESOLUTION, PdFor2FeaturesExplainer.PARAM_PLOT_TYPE, PdFor2FeaturesExplainer.PARAM_QTILE_BINS, PdFor2FeaturesExplainer.PARAM_QTILE_GRID_RESOLUTION, PdFor2FeaturesExplainer.PARAM_SAMPLE_SIZE, PdFor2FeaturesExplainer.PROGRESS_MAX, PdFor2FeaturesExplainer.PROGRESS_MIN, PdFor2FeaturesExplainer.SAMPLE_SIZE, PdFor2FeaturesExplainer.check_compatibility(), PdFor2FeaturesExplainer.explain(), PdFor2FeaturesExplainer.get_result(), PdFor2FeaturesExplainer.normalize(), PdFor2FeaturesExplainer.setup()

- h2o_sonar.explainers.pd_ice_explainer module

PdIceArgs, PdIceExplainer, PdIceExplainer.FILE_ICE_JSON, PdIceExplainer.FILE_PD_JSON, PdIceExplainer.FILE_Y_HAT, PdIceExplainer.GRID_RESOLUTION, PdIceExplainer.KEY_BINS, PdIceExplainer.KEY_LABELS_MAP, PdIceExplainer.MAX_FEATURES, PdIceExplainer.NUMCAT_NUM_CHART, PdIceExplainer.NUMCAT_THRESHOLD, PdIceExplainer.OPT_ICE_1_FRAME_ENABLED, PdIceExplainer.PARAM_CENTER, PdIceExplainer.PARAM_DEBUG_RESIDUALS, PdIceExplainer.PARAM_FEATURES, PdIceExplainer.PARAM_GRID_RESOLUTION, PdIceExplainer.PARAM_HISTOGRAMS, PdIceExplainer.PARAM_MAX_FEATURES, PdIceExplainer.PARAM_NUMCAT_NUM_CHART, PdIceExplainer.PARAM_NUMCAT_THRESHOLD, PdIceExplainer.PARAM_OOR_GRID_RESOLUTION, PdIceExplainer.PARAM_QTILE_BINS, PdIceExplainer.PARAM_QTILE_GRID_RESOLUTION, PdIceExplainer.PARAM_SAMPLE_SIZE, PdIceExplainer.PARAM_SORT_BINS, PdIceExplainer.PROGRESS_MAX, PdIceExplainer.PROGRESS_MIN, PdIceExplainer.SAMPLE_SIZE, PdIceExplainer.UPDATE_PARAM_NUMCAT_OVERRIDE, PdIceExplainer.UPDATE_SCOPE_NUMCAT, PdIceExplainer.UPDATE_TYPE_ADD_FEATURE, PdIceExplainer.UPDATE_TYPE_ADD_NUMCAT, PdIceExplainer.check_compatibility(), PdIceExplainer.explain(), PdIceExplainer.explain_global(), PdIceExplainer.explain_local(), PdIceExplainer.get_result(), PdIceExplainer.normalize_data(), PdIceExplainer.setup()

- h2o_sonar.explainers.residual_dt_surrogate_explainer module

ResidualDecisionTreeSurrogateExplainer, ResidualDecisionTreeSurrogateExplainer.PARAM_CAT_ENCODING, ResidualDecisionTreeSurrogateExplainer.PARAM_DEBUG_RESIDUALS, ResidualDecisionTreeSurrogateExplainer.PARAM_DEBUG_RESIDUALS_CLASS, ResidualDecisionTreeSurrogateExplainer.PARAM_DT_DEPTH, ResidualDecisionTreeSurrogateExplainer.PARAM_NFOLDS, ResidualDecisionTreeSurrogateExplainer.PARAM_QBIN_COLS, ResidualDecisionTreeSurrogateExplainer.PARAM_QBIN_COUNT, ResidualDecisionTreeSurrogateExplainer.check_compatibility(), ResidualDecisionTreeSurrogateExplainer.explain(), ResidualDecisionTreeSurrogateExplainer.explain_problems(), ResidualDecisionTreeSurrogateExplainer.get_result(), ResidualDecisionTreeSurrogateExplainer.is_enabled(), ResidualDecisionTreeSurrogateExplainer.setup()

- h2o_sonar.explainers.residual_pd_ice_explainer module

ResidualPdIceExplainer, ResidualPdIceExplainer.PARAM_CENTER, ResidualPdIceExplainer.PARAM_DEBUG_RESIDUALS, ResidualPdIceExplainer.PARAM_FEATURES, ResidualPdIceExplainer.PARAM_GRID_RESOLUTION, ResidualPdIceExplainer.PARAM_HISTOGRAMS, ResidualPdIceExplainer.PARAM_MAX_FEATURES, ResidualPdIceExplainer.PARAM_NUMCAT_NUM_CHART, ResidualPdIceExplainer.PARAM_NUMCAT_THRESHOLD, ResidualPdIceExplainer.PARAM_OOR_GRID_RESOLUTION, ResidualPdIceExplainer.PARAM_QTILE_BINS, ResidualPdIceExplainer.PARAM_QTILE_GRID_RESOLUTION, ResidualPdIceExplainer.PARAM_SAMPLE_SIZE, ResidualPdIceExplainer.PARAM_SORT_BINS, ResidualPdIceExplainer.check_compatibility(), ResidualPdIceExplainer.explain(), ResidualPdIceExplainer.explain_problems(), ResidualPdIceExplainer.get_result(), ResidualPdIceExplainer.setup()

- h2o_sonar.explainers.segment_performance_explainer module

SegmentPerformanceExplainer, SegmentPerformanceExplainer.DEFAULT_DROP_COLS, SegmentPerformanceExplainer.DEFAULT_NUMBER_OF_BINS, SegmentPerformanceExplainer.DEFAULT_PRECISION, SegmentPerformanceExplainer.PARAM_DROP_COLS, SegmentPerformanceExplainer.PARAM_NUMBER_OF_BINS, SegmentPerformanceExplainer.PARAM_PRECISION, SegmentPerformanceExplainer.PARAM_WORKER, SegmentPerformanceExplainer.RESULT_FILE_CSV, SegmentPerformanceExplainer.Result, SegmentPerformanceExplainer.check_compatibility(), SegmentPerformanceExplainer.explain(), SegmentPerformanceExplainer.get_result(), SegmentPerformanceExplainer.normalize_scatter_plot(), SegmentPerformanceExplainer.normalize_to_gom(), SegmentPerformanceExplainer.setup()

- h2o_sonar.explainers.size_dependency_explainer module

SizeDependencyExplainer, SizeDependencyExplainer.DEFAULT_NUMBER_OF_SPLITS, SizeDependencyExplainer.DEFAULT_TIME_COLUMN, SizeDependencyExplainer.DEFAULT_WORKER_CLEANUP, SizeDependencyExplainer.PARAM_NUMBER_OF_SPLITS, SizeDependencyExplainer.PARAM_PLOT_TYPE, SizeDependencyExplainer.PARAM_TIME_COLUMN, SizeDependencyExplainer.PARAM_WORKER, SizeDependencyExplainer.PARAM_WORKER_CLEANUP, SizeDependencyExplainer.check_compatibility(), SizeDependencyExplainer.explain(), SizeDependencyExplainer.get_result(), SizeDependencyExplainer.setup()

- h2o_sonar.explainers.summary_shap_explainer module

PdIceArgs, PdIceExplainer, PdIceExplainer.FILE_ICE_JSON, PdIceExplainer.FILE_PD_JSON, PdIceExplainer.FILE_Y_HAT, PdIceExplainer.GRID_RESOLUTION, PdIceExplainer.KEY_BINS, PdIceExplainer.KEY_LABELS_MAP, PdIceExplainer.MAX_FEATURES, PdIceExplainer.NUMCAT_NUM_CHART, PdIceExplainer.NUMCAT_THRESHOLD, PdIceExplainer.OPT_ICE_1_FRAME_ENABLED, PdIceExplainer.PARAM_CENTER, PdIceExplainer.PARAM_DEBUG_RESIDUALS, PdIceExplainer.PARAM_FEATURES, PdIceExplainer.PARAM_GRID_RESOLUTION, PdIceExplainer.PARAM_HISTOGRAMS, PdIceExplainer.PARAM_MAX_FEATURES, PdIceExplainer.PARAM_NUMCAT_NUM_CHART, PdIceExplainer.PARAM_NUMCAT_THRESHOLD, PdIceExplainer.PARAM_OOR_GRID_RESOLUTION, PdIceExplainer.PARAM_QTILE_BINS, PdIceExplainer.PARAM_QTILE_GRID_RESOLUTION, PdIceExplainer.PARAM_SAMPLE_SIZE, PdIceExplainer.PARAM_SORT_BINS, PdIceExplainer.PROGRESS_MAX, PdIceExplainer.PROGRESS_MIN, PdIceExplainer.SAMPLE_SIZE, PdIceExplainer.UPDATE_PARAM_NUMCAT_OVERRIDE, PdIceExplainer.UPDATE_SCOPE_NUMCAT, PdIceExplainer.UPDATE_TYPE_ADD_FEATURE, PdIceExplainer.UPDATE_TYPE_ADD_NUMCAT, PdIceExplainer.check_compatibility(), PdIceExplainer.dataset_api, PdIceExplainer.dataset_meta, PdIceExplainer.explain(), PdIceExplainer.explain_global(), PdIceExplainer.explain_local(), PdIceExplainer.explainer_deps, PdIceExplainer.explainer_params, PdIceExplainer.explainer_params_as_str, PdIceExplainer.get_result(), PdIceExplainer.is_on_demand_pd, PdIceExplainer.key, PdIceExplainer.log_name, PdIceExplainer.logger, PdIceExplainer.mli_key, PdIceExplainer.model, PdIceExplainer.model_api, PdIceExplainer.model_meta, PdIceExplainer.normalize_data(), PdIceExplainer.params, PdIceExplainer.persistence, PdIceExplainer.problematic_features, PdIceExplainer.setup(), PdIceExplainer.testset_meta, PdIceExplainer.validset_meta

- h2o_sonar.explainers.transformed_fi_shapley_explainer module

ShapleyMojoTransformedFeatureImportanceExplainer, ShapleyMojoTransformedFeatureImportanceExplainer.DEFAULT_FAST_APPROX, ShapleyMojoTransformedFeatureImportanceExplainer.OPT_BIN_1_CLASS, ShapleyMojoTransformedFeatureImportanceExplainer.check_compatibility(), ShapleyMojoTransformedFeatureImportanceExplainer.explain(), ShapleyMojoTransformedFeatureImportanceExplainer.get_result(), ShapleyMojoTransformedFeatureImportanceExplainer.setup()

- Module contents

- h2o_sonar.evaluators package

- Submodules

- h2o_sonar.evaluators.abc_byop_evaluator module

AbcByopEvaluator, AbcByopEvaluator.Classes, AbcByopEvaluator.IDENTIFIER_ACTUAL_OUTPUT, AbcByopEvaluator.IDENTIFIER_CONTEXT, AbcByopEvaluator.IDENTIFIER_EXPECTED_OUTPUT, AbcByopEvaluator.IDENTIFIER_INPUT, AbcByopEvaluator.KEY_ANSWER, AbcByopEvaluator.KEY_ERROR, AbcByopEvaluator.KEY_PARSED_ANSWER, AbcByopEvaluator.KEY_PROMPT, AbcByopEvaluator.PARAM_JUDGE_HOST, AbcByopEvaluator.PARAM_JUDGE_MODEL, AbcByopEvaluator.check_compatibility(), AbcByopEvaluator.evaluate(), AbcByopEvaluator.get_result(), AbcByopEvaluator.judge, AbcByopEvaluator.setup()

- h2o_sonar.evaluators.bleu_evaluator module

- h2o_sonar.evaluators.classification_evaluator module

- h2o_sonar.evaluators.contact_information_byop_evaluator module

- h2o_sonar.evaluators.fairness_bias_evaluator module

- h2o_sonar.evaluators.gptscore_evaluator module

- h2o_sonar.evaluators.gptscore_machine_translation_evaluator module

- h2o_sonar.evaluators.gptscore_question_answering_evaluator module

GptScoreQuestionAnsweringEvaluator, GptScoreQuestionAnsweringEvaluator.METRIC_CORRECTNESS, GptScoreQuestionAnsweringEvaluator.METRIC_ENGAGEMENT, GptScoreQuestionAnsweringEvaluator.METRIC_FLUENCY, GptScoreQuestionAnsweringEvaluator.METRIC_INTEREST, GptScoreQuestionAnsweringEvaluator.METRIC_RELEVANCE, GptScoreQuestionAnsweringEvaluator.METRIC_SEMANTICALLY_APPROPRIATE, GptScoreQuestionAnsweringEvaluator.METRIC_SPECIFIC, GptScoreQuestionAnsweringEvaluator.METRIC_UNDERSTANDABILITY, GptScoreQuestionAnsweringEvaluator.PARENT

- h2o_sonar.evaluators.gptscore_summary_without_reference_evaluator module

GptScoreSummaryWithoutReferenceEvaluator, GptScoreSummaryWithoutReferenceEvaluator.METRIC_COHERENCE, GptScoreSummaryWithoutReferenceEvaluator.METRIC_CONSISTENCY, GptScoreSummaryWithoutReferenceEvaluator.METRIC_FACTUALITY, GptScoreSummaryWithoutReferenceEvaluator.METRIC_FLUENCY, GptScoreSummaryWithoutReferenceEvaluator.METRIC_INFORMATIVENESS, GptScoreSummaryWithoutReferenceEvaluator.METRIC_RELEVANCE, GptScoreSummaryWithoutReferenceEvaluator.METRIC_SEMANTIC_COVERAGE, GptScoreSummaryWithoutReferenceEvaluator.PARENT

- h2o_sonar.evaluators.gptscore_summary_with_reference_evaluator module

GptScoreSummaryWithReferenceEvaluator, GptScoreSummaryWithReferenceEvaluator.METRIC_COHERENCE, GptScoreSummaryWithReferenceEvaluator.METRIC_FACTUALITY, GptScoreSummaryWithReferenceEvaluator.METRIC_FLUENCY, GptScoreSummaryWithReferenceEvaluator.METRIC_INFORMATIVENESS, GptScoreSummaryWithReferenceEvaluator.METRIC_RELEVANCE, GptScoreSummaryWithReferenceEvaluator.METRIC_SEMANTIC_COVERAGE, GptScoreSummaryWithReferenceEvaluator.PARENT

- h2o_sonar.evaluators.language_mismatch_byop_evaluator module

- h2o_sonar.evaluators.parameterizable_byop_evaluator module

- h2o_sonar.evaluators.perplexity_evaluator module

- h2o_sonar.evaluators.pii_leakage_evaluator module

PiiLeakageEvaluator, PiiLeakageEvaluator.DEFAULT_EVAL_RC, PiiLeakageEvaluator.PARAM_EVAL_RC, PiiLeakageEvaluator.check_compatibility(), PiiLeakageEvaluator.check_creditcard_leakage(), PiiLeakageEvaluator.check_email_leakage(), PiiLeakageEvaluator.check_ssn_leakage(), PiiLeakageEvaluator.evaluate(), PiiLeakageEvaluator.get_result(), PiiLeakageEvaluator.setup()

- h2o_sonar.evaluators.rag_answer_correctness_evaluator module

- h2o_sonar.evaluators.rag_answer_relevancy_evaluator module

- h2o_sonar.evaluators.rag_answer_relevancy_no_judge_evaluator module

RagAnswerRelevancyNoJudgeEvaluator, RagAnswerRelevancyNoJudgeEvaluator.COL_ACTUAL_OUTPUT, RagAnswerRelevancyNoJudgeEvaluator.COL_CONTEXT, RagAnswerRelevancyNoJudgeEvaluator.COL_EXPECTED_OUTPUT, RagAnswerRelevancyNoJudgeEvaluator.COL_INPUT, RagAnswerRelevancyNoJudgeEvaluator.COL_MODEL, RagAnswerRelevancyNoJudgeEvaluator.COL_SCORE, RagAnswerRelevancyNoJudgeEvaluator.METRIC_ANSWER_RELEVANCY, RagAnswerRelevancyNoJudgeEvaluator.check_compatibility(), RagAnswerRelevancyNoJudgeEvaluator.evaluate(), RagAnswerRelevancyNoJudgeEvaluator.get_result(), RagAnswerRelevancyNoJudgeEvaluator.setup(), RagAnswerRelevancyNoJudgeEvaluator.split_sentences()

- h2o_sonar.evaluators.rag_answer_similarity_evaluator module

- h2o_sonar.evaluators.rag_chunk_relevancy_evaluator module

ContextChunkRelevancyEvaluator, ContextChunkRelevancyEvaluator.COL_ACTUAL_OUTPUT, ContextChunkRelevancyEvaluator.COL_CONTEXT, ContextChunkRelevancyEvaluator.COL_EXPECTED_OUTPUT, ContextChunkRelevancyEvaluator.COL_INPUT, ContextChunkRelevancyEvaluator.COL_MODEL, ContextChunkRelevancyEvaluator.COL_SCORE, ContextChunkRelevancyEvaluator.METRIC_PRECISION_RELEVANCY, ContextChunkRelevancyEvaluator.METRIC_RECALL_RELEVANCY, ContextChunkRelevancyEvaluator.check_compatibility(), ContextChunkRelevancyEvaluator.evaluate(), ContextChunkRelevancyEvaluator.get_result(), ContextChunkRelevancyEvaluator.setup(), ContextChunkRelevancyEvaluator.split_sentences()

- h2o_sonar.evaluators.rag_context_precision_evaluator module

- h2o_sonar.evaluators.rag_context_recall_evaluator module

- h2o_sonar.evaluators.rag_context_relevancy_evaluator module

- h2o_sonar.evaluators.rag_faithfulness_evaluator module

- h2o_sonar.evaluators.rag_groundedness_evaluator module

RagGroundednessEvaluator, RagGroundednessEvaluator.COL_ACTUAL_OUTPUT, RagGroundednessEvaluator.COL_CONTEXT, RagGroundednessEvaluator.COL_EXPECTED_OUTPUT, RagGroundednessEvaluator.COL_INPUT, RagGroundednessEvaluator.COL_MODEL, RagGroundednessEvaluator.COL_SCORE, RagGroundednessEvaluator.METRIC_GROUNDEDNESS, RagGroundednessEvaluator.check_compatibility(), RagGroundednessEvaluator.evaluate(), RagGroundednessEvaluator.get_result(), RagGroundednessEvaluator.setup(), RagGroundednessEvaluator.split_sentences()

- h2o_sonar.evaluators.rag_hallucination_evaluator module

RagHallucinationEvaluator, RagHallucinationEvaluator.COL_ACTUAL_OUTPUT, RagHallucinationEvaluator.COL_CONTEXT, RagHallucinationEvaluator.COL_EXPECTED_OUTPUT, RagHallucinationEvaluator.COL_INPUT, RagHallucinationEvaluator.COL_MODEL, RagHallucinationEvaluator.COL_SCORE, RagHallucinationEvaluator.DEFAULT_METRIC_THRESHOLD, RagHallucinationEvaluator.METRIC_HALLUCINATION, RagHallucinationEvaluator.check_compatibility(), RagHallucinationEvaluator.evaluate(), RagHallucinationEvaluator.get_result(), RagHallucinationEvaluator.setup()

- h2o_sonar.evaluators.rag_ragas_evaluator module

RagasEvaluator, RagasEvaluator.KEY_ANSWER, RagasEvaluator.KEY_CONTEXTS, RagasEvaluator.KEY_GROUND_TRUTHS, RagasEvaluator.KEY_QUESTION, RagasEvaluator.METRIC_ANSWER_CORRECTNESS, RagasEvaluator.METRIC_ANSWER_RELEVANCY, RagasEvaluator.METRIC_ANSWER_SIMILARITY, RagasEvaluator.METRIC_CONTEXT_PRECISION, RagasEvaluator.METRIC_CONTEXT_RECALL, RagasEvaluator.METRIC_CONTEXT_RELEVANCY, RagasEvaluator.METRIC_FAITHFULNESS, RagasEvaluator.METRIC_META_ANSWER_CORRECTNESS, RagasEvaluator.METRIC_META_ANSWER_RELEVANCY, RagasEvaluator.METRIC_META_ANSWER_SIMILARITY, RagasEvaluator.METRIC_META_CONTEXT_PRECISION, RagasEvaluator.METRIC_META_CONTEXT_RECALL, RagasEvaluator.METRIC_META_CONTEXT_RELEVANCY, RagasEvaluator.METRIC_META_FAITHFULNESS, RagasEvaluator.METRIC_META_RAGAS, RagasEvaluator.METRIC_RAGAS, RagasEvaluator.check_compatibility(), RagasEvaluator.eval_custom_metrics(), RagasEvaluator.evaluate(), RagasEvaluator.get_result(), RagasEvaluator.setup()

- h2o_sonar.evaluators.rag_tokens_presence_evaluator module

- h2o_sonar.evaluators.rouge_evaluator module

- h2o_sonar.evaluators.sensitive_data_leakage_evaluator module

- h2o_sonar.evaluators.sexism_byop_evaluator module

- h2o_sonar.evaluators.stereotype_byop_evaluator module

- h2o_sonar.evaluators.summarization_byop_evaluator module

- h2o_sonar.evaluators.summarization_evaluator module

SummarizationEvaluator, SummarizationEvaluator.KEY_COMPLETENESS, SummarizationEvaluator.KEY_FAITHFULNESS_CONV, SummarizationEvaluator.KEY_FAITHFULNESS_ZS, SummarizationEvaluator.calculate_scores(), SummarizationEvaluator.check_compatibility(), SummarizationEvaluator.e_model_baai_bge, SummarizationEvaluator.e_model_vitamin_c, SummarizationEvaluator.evaluate(), SummarizationEvaluator.get_result(), SummarizationEvaluator.setup(), SummarizationEvaluator.split_sentences(), SummarizationEvaluator.summac_faith_score1(), SummarizationEvaluator.summac_faith_score2(), SummarizationEvaluator.summary_completeness_batch()

load_summac(), pairwise_distances_wrapper(), segment_calc()

- h2o_sonar.evaluators.toxicity_evaluator module

ToxicityEvaluator, ToxicityEvaluator.DEFAULT_TOXICITY_METRIC_THRESHOLD, ToxicityEvaluator.METRIC_IDENTITY_ATTACK, ToxicityEvaluator.METRIC_INSULT, ToxicityEvaluator.METRIC_OBSCENE, ToxicityEvaluator.METRIC_SEVERE_TOXICITY, ToxicityEvaluator.METRIC_THREAT, ToxicityEvaluator.METRIC_TOXICITY, ToxicityEvaluator.check_compatibility(), ToxicityEvaluator.evaluate(), ToxicityEvaluator.get_result(), ToxicityEvaluator.setup()

- Module contents

- h2o_sonar.lib package

- Subpackages

- h2o_sonar.lib.api package

- Submodules

- h2o_sonar.lib.api.commons module

Branding, CommonInterpretationParams, CommonInterpretationParams.PARAM_DATASET, CommonInterpretationParams.PARAM_DROP_COLS, CommonInterpretationParams.PARAM_MODEL, CommonInterpretationParams.PARAM_MODELS, CommonInterpretationParams.PARAM_PREDICTION_COL, CommonInterpretationParams.PARAM_RESULTS_LOCATION, CommonInterpretationParams.PARAM_SAMPLE_NUM_ROWS, CommonInterpretationParams.PARAM_TARGET_COL, CommonInterpretationParams.PARAM_TESTSET, CommonInterpretationParams.PARAM_USED_FEATURES, CommonInterpretationParams.PARAM_USE_RAW_FEATURES, CommonInterpretationParams.PARAM_VALIDSET, CommonInterpretationParams.PARAM_WEIGHT_COL, CommonInterpretationParams.clone(), CommonInterpretationParams.describe_config_item(), CommonInterpretationParams.describe_config_items(), CommonInterpretationParams.dump(), CommonInterpretationParams.load(), CommonInterpretationParams.to_dict()

ConfigItem, EvaluatorParamType, EvaluatorToRun, ExperimentType, ExplainerFilter, ExplainerJobStatus, ExplainerJobStatus.ABORTED_BY_RESTART, ExplainerJobStatus.ABORTED_BY_USER, ExplainerJobStatus.CANCELLED, ExplainerJobStatus.FAILED, ExplainerJobStatus.FINISHED, ExplainerJobStatus.IN_PROGRESS, ExplainerJobStatus.RUNNING, ExplainerJobStatus.SCHEDULED, ExplainerJobStatus.SUCCESS, ExplainerJobStatus.SYNCING, ExplainerJobStatus.TIMED_OUT, ExplainerJobStatus.UNKNOWN, ExplainerJobStatus.from_int(), ExplainerJobStatus.is_job_failed(), ExplainerJobStatus.is_job_finished(), ExplainerJobStatus.is_job_running(), ExplainerJobStatus.to_string()

ExplainerParamKey, ExplainerParamKey.KEY_ALL_EXPLAINERS_PARAMS, ExplainerParamKey.KEY_DATASET, ExplainerParamKey.KEY_DESCR_PATH, ExplainerParamKey.KEY_EXPERIMENT_TYPE, ExplainerParamKey.KEY_E_DEPS, ExplainerParamKey.KEY_E_ID, ExplainerParamKey.KEY_E_JOB_KEY, ExplainerParamKey.KEY_E_PARAMS, ExplainerParamKey.KEY_FEATURES_META, ExplainerParamKey.KEY_I_DATA_PATH, ExplainerParamKey.KEY_KWARGS, ExplainerParamKey.KEY_LEGACY_I_PARAMS, ExplainerParamKey.KEY_MODEL, ExplainerParamKey.KEY_MODEL_TYPE, ExplainerParamKey.KEY_ON_DEMAND, ExplainerParamKey.KEY_ON_DEMAND_MLI_KEY, ExplainerParamKey.KEY_ON_DEMAND_PARAMS, ExplainerParamKey.KEY_PARAMS, ExplainerParamKey.KEY_RUN_KEY, ExplainerParamKey.KEY_TESTSET, ExplainerParamKey.KEY_USER, ExplainerParamKey.KEY_VALIDSET, ExplainerParamKey.KEY_WORKER_NAME

ExplainerParamType, ExplainerToRun, ExplanationScope, FilterEntry, InterpretationParamType, Keyword, KeywordGroup, KeywordGroups, LlmModelHostType, LookAndFeel, LookAndFeel.BLUE_THEME, LookAndFeel.COLORMAP_BLUE_2_RED, LookAndFeel.COLORMAP_WHITE_2_BLACK, LookAndFeel.COLORMAP_YELLOW_2_BLACK, LookAndFeel.COLOR_BLACK, LookAndFeel.COLOR_DAI_GREEN, LookAndFeel.COLOR_H2OAI_YELLOW, LookAndFeel.COLOR_HOT_ORANGE, LookAndFeel.COLOR_MATPLOTLIB_BLUE, LookAndFeel.COLOR_RED, LookAndFeel.COLOR_WHITE, LookAndFeel.DRIVERLESS_AI_THEME, LookAndFeel.FORMAT_HEXA, LookAndFeel.H2O_SONAR_THEME, LookAndFeel.KEY_LF, LookAndFeel.THEME_2_BG_COLOR, LookAndFeel.THEME_2_COLORMAP, LookAndFeel.THEME_2_FG_COLOR, LookAndFeel.THEME_2_LINE_COLOR, LookAndFeel.get_bg_color(), LookAndFeel.get_colormap(), LookAndFeel.get_fg_color(), LookAndFeel.get_line_color()

MetricMeta, MetricMeta.DATA_TYPE_SECONDS, MetricMeta.EFFECTIVE_INF, MetricMeta.KEY_DATA_TYPE, MetricMeta.KEY_DESCRIPTION, MetricMeta.KEY_DISPLAY_FORMAT, MetricMeta.KEY_DISPLAY_NAME, MetricMeta.KEY_EXCLUDE, MetricMeta.KEY_HIGHER_IS_BETTER, MetricMeta.KEY_IS_PRIMARY_METRIC, MetricMeta.KEY_KEY, MetricMeta.KEY_PARENT_METRIC, MetricMeta.KEY_THRESHOLD, MetricMeta.KEY_VALUE_ENUM, MetricMeta.KEY_VALUE_RANGE, MetricMeta.copy(), MetricMeta.dump(), MetricMeta.from_dict(), MetricMeta.load(), MetricMeta.to_dict(), MetricMeta.to_md()

MetricsMeta, MetricsMeta.KEY_META, MetricsMeta.add_metric(), MetricsMeta.contains(), MetricsMeta.copy_with_overrides(), MetricsMeta.dump(), MetricsMeta.from_dict(), MetricsMeta.get_metric(), MetricsMeta.get_metric_best_value(), MetricsMeta.get_metric_description(), MetricsMeta.get_metric_keys(), MetricsMeta.get_metric_worst_value(), MetricsMeta.get_primary_metric(), MetricsMeta.get_threshold(), MetricsMeta.is_higher_better(), MetricsMeta.is_metric_passed(), MetricsMeta.load(), MetricsMeta.set_threshold(), MetricsMeta.size(), MetricsMeta.to_dict(), MetricsMeta.to_list()

MimeTypeMimeType.EXT_CSVMimeType.EXT_DATATABLEMimeType.EXT_DOCXMimeType.EXT_HTMLMimeType.EXT_JPGMimeType.EXT_JSONMimeType.EXT_MARKDOWNMimeType.EXT_PNGMimeType.EXT_SVGMimeType.EXT_TEXTMimeType.EXT_ZIPMimeType.MIME_CSVMimeType.MIME_DATATABLEMimeType.MIME_DOCXMimeType.MIME_EVALSTUDIO_MARKDOWNMimeType.MIME_HTMLMimeType.MIME_IMAGEMimeType.MIME_JPGMimeType.MIME_JSONMimeType.MIME_JSON_CSVMimeType.MIME_JSON_DATATABLEMimeType.MIME_MARKDOWNMimeType.MIME_MODEL_PIPELINEMimeType.MIME_PDFMimeType.MIME_PNGMimeType.MIME_SVGMimeType.MIME_TEXTMimeType.MIME_ZIPMimeType.ext_for_mime()

  - ModelTypeExplanation
  - Param
  - ParamType
  - PerturbationIntensity
  - PerturbatorToRun
  - ResourceHandle
  - ResourceLocatorType
  - SafeJavaScript
  - SemVer
  - UpdateGlobalExplanation
  - UpdateGlobalExplanation.OPT_CLASS
  - UpdateGlobalExplanation.OPT_FEATURE
  - UpdateGlobalExplanation.OPT_INHERIT
  - UpdateGlobalExplanation.OPT_MERGE
  - UpdateGlobalExplanation.OPT_REPLACE
  - UpdateGlobalExplanation.OPT_REQUEST
  - UpdateGlobalExplanation.PARAMS_SOURCE
  - UpdateGlobalExplanation.UPDATE_MODE
  - UpdateGlobalExplanation.UPDATE_SCOPE

  - add_string_list()
  - base_pkg()
  - generate_key()
  - harmonic_mean()
  - is_ncname()
  - is_port_used()
  - is_valid_key()

- h2o_sonar.lib.api.datasets module

DatasetApiExplainableColumnMetaExplainableDatasetExplainableDataset.COL_BIASExplainableDataset.KEY_DATAExplainableDataset.KEY_METADATAExplainableDataset.dataExplainableDataset.frame_2_datatable()ExplainableDataset.frame_2_numpy()ExplainableDataset.frame_2_pandas()ExplainableDataset.is_bias_col()ExplainableDataset.metaExplainableDataset.prepare()ExplainableDataset.sample()ExplainableDataset.to_dict()ExplainableDataset.to_json()ExplainableDataset.transform()

ExplainableDatasetHandleExplainableDatasetMetaExplainableDatasetMeta.KEY_COLUMNS_CATExplainableDatasetMeta.KEY_COLUMNS_METAExplainableDatasetMeta.KEY_COLUMNS_NUMExplainableDatasetMeta.KEY_COLUMN_NAMESExplainableDatasetMeta.KEY_COLUMN_TYPESExplainableDatasetMeta.KEY_COLUMN_UNIQUESExplainableDatasetMeta.KEY_FILE_NAMEExplainableDatasetMeta.KEY_FILE_PATHExplainableDatasetMeta.KEY_FILE_SIZEExplainableDatasetMeta.KEY_MISSING_VALUESExplainableDatasetMeta.KEY_ORIGINAL_DATASET_PATHExplainableDatasetMeta.KEY_ORIGINAL_DATASET_SAMPLEDExplainableDatasetMeta.KEY_ORIGINAL_DATASET_SHAPEExplainableDatasetMeta.KEY_ORIGINAL_DATASET_SIZEExplainableDatasetMeta.KEY_ROW_COUNTExplainableDatasetMeta.KEY_SHAPEExplainableDatasetMeta.copy()ExplainableDatasetMeta.get_column_meta()ExplainableDatasetMeta.has_column()ExplainableDatasetMeta.is_categorical_column()ExplainableDatasetMeta.is_numeric_column()ExplainableDatasetMeta.to_dict()ExplainableDatasetMeta.to_json()

ExplainableDatasetTypeExplainableDatatableDatasetLlmDatasetLlmDataset.COLUMNSLlmDataset.COL_ACTUAL_DURATIONLlmDataset.COL_ACTUAL_OUTPUTLlmDataset.COL_CATEGORIESLlmDataset.COL_CONTEXTLlmDataset.COL_CORPUSLlmDataset.COL_COSTLlmDataset.COL_EXPECTED_OUTPUTLlmDataset.COL_INPUTLlmDataset.COL_MODEL_KEYLlmDataset.COL_OUTPUT_CONDITIONLlmDataset.COL_OUTPUT_CONSTRAINTSLlmDataset.COL_RELATIONSHIPSLlmDataset.COL_TEST_KEYLlmDataset.KEY_ACTUAL_DURATIONLlmDataset.KEY_ACTUAL_OUTPUTLlmDataset.KEY_CATEGORIESLlmDataset.KEY_CONTEXTLlmDataset.KEY_CORPUSLlmDataset.KEY_COSTLlmDataset.KEY_EXPECTED_OUTPUTLlmDataset.KEY_INPUTLlmDataset.KEY_INPUTSLlmDataset.KEY_KEYLlmDataset.KEY_MODEL_KEYLlmDataset.KEY_OUTPUT_CONDITIONLlmDataset.KEY_OUTPUT_CONSTRAINTSLlmDataset.KEY_RELATIONSHIPSLlmDataset.KEY_TC_KEYLlmDataset.KEY_TEST_KEYLlmDataset.LlmDatasetRowLlmDataset.add_input()LlmDataset.from_datatable_dict()LlmDataset.from_datatable_json_enc_col()LlmDataset.from_dict()LlmDataset.load_from_json()LlmDataset.merge()LlmDataset.perturb()LlmDataset.prompts()LlmDataset.save_as_json()LlmDataset.shape()LlmDataset.stats()LlmDataset.to_datatable()LlmDataset.to_datatable_dict()LlmDataset.to_dict()

  - LlmEvalResults
  - LlmEvalResults.COL_ACTUAL_OUTPUT_META
  - LlmEvalResults.KEY_RESULTS
  - LlmEvalResults.LlmEvalResultRow
  - LlmEvalResults.add_result()
  - LlmEvalResults.from_dict()
  - LlmEvalResults.load_from_json()
  - LlmEvalResults.prompts()
  - LlmEvalResults.save_as_json()
  - LlmEvalResults.shape()
  - LlmEvalResults.to_datatable()
  - LlmEvalResults.to_datatable_dict()
  - LlmEvalResults.to_dict()
  - LlmEvalResults.to_llm_dataset()

LlmInputRelLlmInputRelTargetTypeLlmInputRelTypeLlmPromptCategoriesLlmPromptCategories.classificationLlmPromptCategories.codingLlmPromptCategories.evaluationLlmPromptCategories.factsLlmPromptCategories.harmLlmPromptCategories.knowledgeLlmPromptCategories.mathLlmPromptCategories.planningLlmPromptCategories.question_answeringLlmPromptCategories.reasoningLlmPromptCategories.recommendationLlmPromptCategories.summarizationLlmPromptCategories.troubleshootingLlmPromptCategories.unknownLlmPromptCategories.writing

filter_importance_greater_than_zero()

- h2o_sonar.lib.api.explainers module

  - Explainer
  - Explainer.ARG_EXPLAINER_PARAMS
  - Explainer.EXPLAINERS_PURPOSES
  - Explainer.KEYWORD_COMPLIANCE_TEST
  - Explainer.KEYWORD_DEFAULT
  - Explainer.KEYWORD_EVALUATES_LLM
  - Explainer.KEYWORD_EVALUATES_RAG
  - Explainer.KEYWORD_EXPLAINS_APPROX_BEHAVIOR
  - Explainer.KEYWORD_EXPLAINS_DATASET
  - Explainer.KEYWORD_EXPLAINS_FAIRNESS
  - Explainer.KEYWORD_EXPLAINS_FEATURE_BEHAVIOR
  - Explainer.KEYWORD_EXPLAINS_MODEL_DEBUGGING
  - Explainer.KEYWORD_EXPLAINS_O_FEATURE_IMPORTANCE
  - Explainer.KEYWORD_EXPLAINS_T_FEATURE_IMPORTANCE
  - Explainer.KEYWORD_EXPLAINS_UNKNOWN
  - Explainer.KEYWORD_H2O_MODEL_VALIDATION
  - Explainer.KEYWORD_H2O_SONAR
  - Explainer.KEYWORD_IS_FAST
  - Explainer.KEYWORD_IS_SLOW
  - Explainer.KEYWORD_LLM
  - Explainer.KEYWORD_MOCK
  - Explainer.KEYWORD_NLP
  - Explainer.KEYWORD_PREFIX_CAPABILITY
  - Explainer.KEYWORD_PREFIX_EXPLAINS
  - Explainer.KEYWORD_PROXY
  - Explainer.KEYWORD_REQUIRES_H2O3
  - Explainer.KEYWORD_REQUIRES_OPENAI_KEY
  - Explainer.KEYWORD_RQ_AA
  - Explainer.KEYWORD_RQ_C
  - Explainer.KEYWORD_RQ_EA
  - Explainer.KEYWORD_RQ_J
  - Explainer.KEYWORD_RQ_P
  - Explainer.KEYWORD_RQ_RC
  - Explainer.KEYWORD_TEMPLATE
  - Explainer.KEYWORD_UNLISTED
  - Explainer.add_insight()
  - Explainer.add_problem()
  - Explainer.as_descriptor()
  - Explainer.brief_description
  - Explainer.can_explain()
  - Explainer.check_compatibility()
  - Explainer.check_required_modules()
  - Explainer.class_brief_description()
  - Explainer.class_description()
  - Explainer.class_display_name()
  - Explainer.class_name
  - Explainer.class_tagline()
  - Explainer.create_explanation_workdir_archive()
  - Explainer.dependencies
  - Explainer.depends_on()
  - Explainer.description
  - Explainer.destroy()
  - Explainer.display_name
  - Explainer.evaluator_id()
  - Explainer.exlainer_params_as_dict()
  - Explainer.expected_custom_class
  - Explainer.explain()
  - Explainer.explain_global()
  - Explainer.explain_insights()
  - Explainer.explain_local()
  - Explainer.explain_problems()
  - Explainer.explainer_id()
  - Explainer.explainer_version()
  - Explainer.explains_binary()
  - Explainer.explains_multiclass()
  - Explainer.explains_regression()
  - Explainer.explanations
  - Explainer.fit()
  - Explainer.get_explanations()
  - Explainer.get_result()
  - Explainer.has_explanation_scopes()
  - Explainer.has_explanation_types()
  - Explainer.has_explanations()
  - Explainer.has_model_type_explanations()
  - Explainer.is_enabled()
  - Explainer.is_iid()
  - Explainer.is_image()
  - Explainer.is_llm()
  - Explainer.is_rag()
  - Explainer.is_time_series()
  - Explainer.is_unsupervised()
  - Explainer.keywords
  - Explainer.load()
  - Explainer.load_descriptor()
  - Explainer.metrics_meta()
  - Explainer.parameters()
  - Explainer.priority()
  - Explainer.report_progress()
  - Explainer.requires_model()
  - Explainer.requires_predict_method()
  - Explainer.requires_preloaded_predictor()
  - Explainer.run_explain()
  - Explainer.run_explain_global()
  - Explainer.run_explain_local()
  - Explainer.run_fit()
  - Explainer.save()
  - Explainer.save_descriptor()
  - Explainer.setup()
  - Explainer.supports_dataset_locator()
  - Explainer.supports_model_locator()
  - Explainer.tagline
  - Explainer.validate_explanations()
  - Explainer.working_dir

  - ExplainerArgs
  - ExplainerDescriptor
  - ExplainerDescriptor.KEY_BRIEF_DESCRIPTION
  - ExplainerDescriptor.KEY_CAN_EXPLAIN
  - ExplainerDescriptor.KEY_DESCRIPTION
  - ExplainerDescriptor.KEY_DISPLAY_NAME
  - ExplainerDescriptor.KEY_EXPLANATIONS
  - ExplainerDescriptor.KEY_EXPLANATION_SCOPES
  - ExplainerDescriptor.KEY_ID
  - ExplainerDescriptor.KEY_KEYWORDS
  - ExplainerDescriptor.KEY_METRICS_META
  - ExplainerDescriptor.KEY_MODEL_TYPES
  - ExplainerDescriptor.KEY_NAME
  - ExplainerDescriptor.KEY_PARAMETERS
  - ExplainerDescriptor.KEY_TAGLINE
  - ExplainerDescriptor.clone()
  - ExplainerDescriptor.dump()
  - ExplainerDescriptor.load()

  - ExplainerParam
  - ExplainerRegistry
  - ExplainerResult
  - OnDemandExplainKey
  - OnDemandExplainMethod
  - SurrogateExplainer

- h2o_sonar.lib.api.explanations module

AbcHeatmapExplanationAutoReportExplanationCustomArchiveExplanationDiaExplanationDurationStatsKeyExplanationExplanation.DISPLAY_CAT_AUTOREPORTExplanation.DISPLAY_CAT_COMPLIANCEExplanation.DISPLAY_CAT_CUSTOMExplanation.DISPLAY_CAT_DAI_MODELExplanation.DISPLAY_CAT_DATAExplanation.DISPLAY_CAT_EXAMPLEExplanation.DISPLAY_CAT_LLMExplanation.DISPLAY_CAT_MOCKExplanation.DISPLAY_CAT_MODELExplanation.DISPLAY_CAT_NLPExplanation.DISPLAY_CAT_SURROGATESExplanation.DISPLAY_CAT_SURROGATES_ON_RESExplanation.DISPLAY_CAT_TEMPLATEExplanation.add_format()Explanation.as_class_descriptor()Explanation.as_descriptor()Explanation.display_categoryExplanation.display_nameExplanation.explainerExplanation.explanation_scope()Explanation.explanation_type()Explanation.format_typesExplanation.get_format()Explanation.has_localExplanation.is_global()Explanation.validate()

ExplanationDescriptorFlippedPerturbedTestCaseGlobal3dDataExplanationGlobalDataFrameExplanationGlobalDtExplanationGlobalFeatImpExplanationGlobalGroupedBarChartExplanationGlobalHtmlFragmentExplanationGlobalLinePlotExplanationGlobalNlpLocoExplanationGlobalRuleExplanationGlobalScatterPlotExplanationGlobalSummaryFeatImpExplanationIndividualConditionalExplanationLlmBoolLeaderboardExplanationLlmBoolLeaderboardExplanation.AdditionalDetailsLlmBoolLeaderboardExplanation.DEFAULT_METRIC_THRESHOLDLlmBoolLeaderboardExplanation.FailureLlmBoolLeaderboardExplanation.Failure.actual_outputLlmBoolLeaderboardExplanation.Failure.actual_output_metaLlmBoolLeaderboardExplanation.Failure.ctx_bytesLlmBoolLeaderboardExplanation.Failure.ctx_chunksLlmBoolLeaderboardExplanation.Failure.doc_urlLlmBoolLeaderboardExplanation.Failure.error_messageLlmBoolLeaderboardExplanation.Failure.expected_outputLlmBoolLeaderboardExplanation.Failure.fail_generationLlmBoolLeaderboardExplanation.Failure.fail_parseLlmBoolLeaderboardExplanation.Failure.fail_retrievalLlmBoolLeaderboardExplanation.Failure.inputLlmBoolLeaderboardExplanation.Failure.model_keyLlmBoolLeaderboardExplanation.Failure.output_conditionLlmBoolLeaderboardExplanation.Failure.output_constraintsLlmBoolLeaderboardExplanation.Failure.row_key

  - LlmBoolLeaderboardExplanation.KEY_INPUT_FAILURES
  - LlmBoolLeaderboardExplanation.KEY_INPUT_FAILURES_COUNT
  - LlmBoolLeaderboardExplanation.KEY_INPUT_FAILURES_GENERATION_COUNT
  - LlmBoolLeaderboardExplanation.KEY_INPUT_FAILURES_PARSE_COUNT
  - LlmBoolLeaderboardExplanation.KEY_INPUT_FAILURES_RETRIEVAL_COUNT
  - LlmBoolLeaderboardExplanation.KEY_INPUT_PASSES_COUNT
  - LlmBoolLeaderboardExplanation.KEY_MODEL_FAILURES
  - LlmBoolLeaderboardExplanation.KEY_MODEL_FAILURES_COUNT
  - LlmBoolLeaderboardExplanation.KEY_MODEL_FAILURES_GENERATION_COUNT
  - LlmBoolLeaderboardExplanation.KEY_MODEL_FAILURES_PARSE_COUNT
  - LlmBoolLeaderboardExplanation.KEY_MODEL_FAILURES_RETRIEVAL_COUNT
  - LlmBoolLeaderboardExplanation.KEY_MODEL_PASSES_COUNT
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_ERR_MSG
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_FAIL
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_FAIL_A
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_FAIL_P
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_FAIL_R
  - LlmBoolLeaderboardExplanation.KEY_RESULT_CHECK_OK
  - LlmBoolLeaderboardExplanation.KEY_TOTAL_COST
  - LlmBoolLeaderboardExplanation.KEY_TOTAL_TIME
  - LlmBoolLeaderboardExplanation.LEADERBOARD_METRICS_META
  - LlmBoolLeaderboardExplanation.METRIC_META_MODEL_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_META_MODEL_GENERATION_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_META_MODEL_PARSE_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_META_MODEL_PASSES
  - LlmBoolLeaderboardExplanation.METRIC_META_MODEL_RETRIEVAL_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_MODEL_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_MODEL_GENERATION_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_MODEL_PARSE_FAILURES
  - LlmBoolLeaderboardExplanation.METRIC_MODEL_PASSES
  - LlmBoolLeaderboardExplanation.METRIC_MODEL_RETRIEVAL_FAILURES
  - LlmBoolLeaderboardExplanation.add_evalstudio_markdown_format()
  - LlmBoolLeaderboardExplanation.add_failure()
  - LlmBoolLeaderboardExplanation.add_json_format()
  - LlmBoolLeaderboardExplanation.add_markdown_format()
  - LlmBoolLeaderboardExplanation.add_pass()
  - LlmBoolLeaderboardExplanation.add_total_cost()
  - LlmBoolLeaderboardExplanation.add_total_time()
  - LlmBoolLeaderboardExplanation.as_dict()
  - LlmBoolLeaderboardExplanation.as_evalstudio_markdown()
  - LlmBoolLeaderboardExplanation.as_html()
  - LlmBoolLeaderboardExplanation.as_leaderboard_dict()
  - LlmBoolLeaderboardExplanation.as_markdown()
  - LlmBoolLeaderboardExplanation.build()
  - LlmBoolLeaderboardExplanation.check_and_report_negative_cost()
  - LlmBoolLeaderboardExplanation.evaluation_cost()
  - LlmBoolLeaderboardExplanation.from_eval_results()
  - LlmBoolLeaderboardExplanation.get_insights()
  - LlmBoolLeaderboardExplanation.key_2_rag_type_prefix()
  - LlmBoolLeaderboardExplanation.sort_models_leaderboard()
  - LlmBoolLeaderboardExplanation.sort_prompts_by_empty_ctxs()
  - LlmBoolLeaderboardExplanation.sort_prompts_by_failures()
  - LlmBoolLeaderboardExplanation.summary_as_markdown()
  - LlmBoolLeaderboardExplanation.validate()

LlmClassifierLeaderboardExplanationLlmClassifierLeaderboardExplanation.DEFAULT_METRIC_THRESHOLDLlmClassifierLeaderboardExplanation.METRIC_ACCURACYLlmClassifierLeaderboardExplanation.METRIC_F1LlmClassifierLeaderboardExplanation.METRIC_META_ACCURACYLlmClassifierLeaderboardExplanation.METRIC_META_F1LlmClassifierLeaderboardExplanation.METRIC_META_PRECISIONLlmClassifierLeaderboardExplanation.METRIC_META_RECALLLlmClassifierLeaderboardExplanation.METRIC_PRECISIONLlmClassifierLeaderboardExplanation.METRIC_RECALLLlmClassifierLeaderboardExplanation.add_evalstudio_markdown_format()LlmClassifierLeaderboardExplanation.add_json_format()LlmClassifierLeaderboardExplanation.add_markdown_format()LlmClassifierLeaderboardExplanation.as_dict()LlmClassifierLeaderboardExplanation.as_html()LlmClassifierLeaderboardExplanation.as_markdown()LlmClassifierLeaderboardExplanation.build()LlmClassifierLeaderboardExplanation.from_eval_results()LlmClassifierLeaderboardExplanation.get_insights()LlmClassifierLeaderboardExplanation.sort_prompts_by_failures()LlmClassifierLeaderboardExplanation.validate()

LlmEvalResultsExplanationLlmHeatmapLeaderboardExplanationLlmHeatmapLeaderboardExplanation.LLM_MODEL_ANONYMOUSLlmHeatmapLeaderboardExplanation.add_col_value()LlmHeatmapLeaderboardExplanation.add_evalstudio_markdown_format()LlmHeatmapLeaderboardExplanation.add_json_format()LlmHeatmapLeaderboardExplanation.add_markdown_format()LlmHeatmapLeaderboardExplanation.as_dict()LlmHeatmapLeaderboardExplanation.as_html()LlmHeatmapLeaderboardExplanation.as_markdown()LlmHeatmapLeaderboardExplanation.build()LlmHeatmapLeaderboardExplanation.from_eval_results()LlmHeatmapLeaderboardExplanation.get_insights()LlmHeatmapLeaderboardExplanation.sort_prompts_by_failures()LlmHeatmapLeaderboardExplanation.truncate()LlmHeatmapLeaderboardExplanation.validate()

LlmLeaderboardExplanationLlmProcedureEvalLeaderboardExplanationLlmProcedureEvalLeaderboardExplanation.KEY_ALIGNMENT_MATRIXLlmProcedureEvalLeaderboardExplanation.KEY_DYN_PROG_MATRIXLlmProcedureEvalLeaderboardExplanation.LLM_MODEL_ANONYMOUSLlmProcedureEvalLeaderboardExplanation.add_col_value()LlmProcedureEvalLeaderboardExplanation.add_evalstudio_markdown_format()LlmProcedureEvalLeaderboardExplanation.add_json_format()LlmProcedureEvalLeaderboardExplanation.add_markdown_format()LlmProcedureEvalLeaderboardExplanation.as_dict()LlmProcedureEvalLeaderboardExplanation.as_html()LlmProcedureEvalLeaderboardExplanation.as_markdown()LlmProcedureEvalLeaderboardExplanation.build()LlmProcedureEvalLeaderboardExplanation.from_eval_results()LlmProcedureEvalLeaderboardExplanation.get_insights()LlmProcedureEvalLeaderboardExplanation.sort_prompts_by_failures()LlmProcedureEvalLeaderboardExplanation.truncate()LlmProcedureEvalLeaderboardExplanation.validate()

  - LocalDataFrameExplanation
  - LocalDtExplanation
  - LocalFeatImpExplanation
  - LocalHtmlSnippetExplanation
  - LocalNlpLocoExplanation
  - LocalRuleExplanation
  - LocalSummaryFeatImpExplanation
  - LocalTextSnippetExplanation
  - LocoExplanation
  - ModelValidationResultExplanation
  - NlpTokenizerExplanation
  - OnDemandExplanation
  - PartialDependenceExplanation
  - ProxyExplanation
  - ReportExplanation
  - SaExplanation
  - TextExplanation
  - TimeSeriesAppExplanation
  - WorkDirArchiveExplanation
  - diagnose_perturbation_flips()

- h2o_sonar.lib.api.formats module

  - CsvFormatCustomExplanationFormat
  - CustomArchiveZipFormat
  - CustomCsvFormat
  - CustomJsonFormat
  - DatatableCustomExplanationFormat
  - DiaTextFormat
  - DocxFormat
  - EvalStudioMarkdownFormat
  - ExplanationFormat
  - ExplanationFormat.DEFAULT_PAGE_SIZE
  - ExplanationFormat.FEATURE_TYPE_CAT
  - ExplanationFormat.FEATURE_TYPE_CAT_NUM
  - ExplanationFormat.FEATURE_TYPE_DATE
  - ExplanationFormat.FEATURE_TYPE_DATETIME
  - ExplanationFormat.FEATURE_TYPE_NUM
  - ExplanationFormat.FEATURE_TYPE_TIME
  - ExplanationFormat.FILE_PREFIX_EXPLANATION_IDX
  - ExplanationFormat.KEYWORD_RESIDUALS
  - ExplanationFormat.KEY_ACTION
  - ExplanationFormat.KEY_ACTION_TYPE
  - ExplanationFormat.KEY_ACTUAL
  - ExplanationFormat.KEY_BIAS
  - ExplanationFormat.KEY_CATEGORICAL
  - ExplanationFormat.KEY_DATA
  - ExplanationFormat.KEY_DATA_HISTOGRAM
  - ExplanationFormat.KEY_DATA_HISTOGRAM_CAT
  - ExplanationFormat.KEY_DATA_HISTOGRAM_NUM
  - ExplanationFormat.KEY_DATE
  - ExplanationFormat.KEY_DATE_TIME
  - ExplanationFormat.KEY_DEFAULT_CLASS
  - ExplanationFormat.KEY_DOC
  - ExplanationFormat.KEY_EXPLAINER_JOB_KEY
  - ExplanationFormat.KEY_FEATURES
  - ExplanationFormat.KEY_FEATURE_TYPE
  - ExplanationFormat.KEY_FEATURE_VALUE
  - ExplanationFormat.KEY_FILES
  - ExplanationFormat.KEY_FILES_DETAILS
  - ExplanationFormat.KEY_FILES_NUMCAT_ASPECT
  - ExplanationFormat.KEY_FULLNAME
  - ExplanationFormat.KEY_ID
  - ExplanationFormat.KEY_IS_MULTI
  - ExplanationFormat.KEY_ITEM_ORDER
  - ExplanationFormat.KEY_KEYWORDS
  - ExplanationFormat.KEY_LABEL
  - ExplanationFormat.KEY_METADATA
  - ExplanationFormat.KEY_METRICS
  - ExplanationFormat.KEY_MIME
  - ExplanationFormat.KEY_MLI_KEY
  - ExplanationFormat.KEY_NAME
  - ExplanationFormat.KEY_NUMERIC
  - ExplanationFormat.KEY_ON_DEMAND
  - ExplanationFormat.KEY_ON_DEMAND_PARAMS
  - ExplanationFormat.KEY_PAGE_OFFSET
  - ExplanationFormat.KEY_PAGE_SIZE
  - ExplanationFormat.KEY_RAW_FEATURES
  - ExplanationFormat.KEY_ROWS_PER_PAGE
  - ExplanationFormat.KEY_RUNNING_ACTION
  - ExplanationFormat.KEY_SCOPE
  - ExplanationFormat.KEY_SYNC_ON_DEMAND
  - ExplanationFormat.KEY_TIME
  - ExplanationFormat.KEY_TOTAL_ROWS
  - ExplanationFormat.KEY_VALUE
  - ExplanationFormat.KEY_Y_FILE
  - ExplanationFormat.LABEL_REGRESSION
  - ExplanationFormat.SCOPE_GLOBAL
  - ExplanationFormat.SCOPE_LOCAL
  - ExplanationFormat.add_data()
  - ExplanationFormat.add_file()
  - ExplanationFormat.explanation
  - ExplanationFormat.file_names
  - ExplanationFormat.get_data()
  - ExplanationFormat.get_local_explanation()
  - ExplanationFormat.get_page()
  - ExplanationFormat.index_file_name
  - ExplanationFormat.is_on_demand()
  - ExplanationFormat.is_paged()
  - ExplanationFormat.load_meta()
  - ExplanationFormat.mime
  - ExplanationFormat.update_data()

ExplanationFormatUtilsGlobal3dDataJSonCsvFormatGlobal3dDataJSonFormatGlobalDtJSonFormatGlobalDtJSonFormat.KEY_CHILDRENGlobalDtJSonFormat.KEY_EDGE_INGlobalDtJSonFormat.KEY_EDGE_WEIGHTGlobalDtJSonFormat.KEY_KEYGlobalDtJSonFormat.KEY_LEAF_PATHGlobalDtJSonFormat.KEY_NAMEGlobalDtJSonFormat.KEY_PARENTGlobalDtJSonFormat.KEY_TOTAL_WEIGHTGlobalDtJSonFormat.KEY_WEIGHTGlobalDtJSonFormat.TreeNodeGlobalDtJSonFormat.load_index_file()GlobalDtJSonFormat.mimeGlobalDtJSonFormat.serialize_data_file()GlobalDtJSonFormat.serialize_index_file()GlobalDtJSonFormat.validate_data()

GlobalFeatImpDatatableFormatGlobalFeatImpJSonCsvFormatGlobalFeatImpJSonDatatableFormatGlobalFeatImpJSonDatatableFormat.COL_GLOBAL_SCOPEGlobalFeatImpJSonDatatableFormat.COL_IMPORTANCEGlobalFeatImpJSonDatatableFormat.COL_NAMEGlobalFeatImpJSonDatatableFormat.add_data_frame()GlobalFeatImpJSonDatatableFormat.dict_to_data_frame()GlobalFeatImpJSonDatatableFormat.from_lists()GlobalFeatImpJSonDatatableFormat.get_data()GlobalFeatImpJSonDatatableFormat.get_page()GlobalFeatImpJSonDatatableFormat.is_paged()GlobalFeatImpJSonDatatableFormat.mimeGlobalFeatImpJSonDatatableFormat.serialize_index_file()GlobalFeatImpJSonDatatableFormat.validate_data()

GlobalFeatImpJSonFormatGlobalFeatImpJSonFormat.KEY_LABELGlobalFeatImpJSonFormat.KEY_VALUEGlobalFeatImpJSonFormat.from_dataframe_to_json()GlobalFeatImpJSonFormat.from_json_datatable()GlobalFeatImpJSonFormat.get_global_explanation()GlobalFeatImpJSonFormat.load_index_file()GlobalFeatImpJSonFormat.mimeGlobalFeatImpJSonFormat.serialize_data_file()GlobalFeatImpJSonFormat.serialize_index_file()GlobalFeatImpJSonFormat.validate_data()

GlobalGroupedBarChartJSonDatatableFormatGlobalGroupedBarChartJSonDatatableFormat.COL_XGlobalGroupedBarChartJSonDatatableFormat.COL_Y_GROUP_1GlobalGroupedBarChartJSonDatatableFormat.COL_Y_GROUP_2GlobalGroupedBarChartJSonDatatableFormat.add_data_frame()GlobalGroupedBarChartJSonDatatableFormat.get_data()GlobalGroupedBarChartJSonDatatableFormat.is_paged()GlobalGroupedBarChartJSonDatatableFormat.load_index_file()GlobalGroupedBarChartJSonDatatableFormat.mimeGlobalGroupedBarChartJSonDatatableFormat.serialize_index_file()GlobalGroupedBarChartJSonDatatableFormat.validate_data()

GlobalLinePlotJSonFormatGlobalNlpLocoJSonFormatGlobalNlpLocoJSonFormat.FILTER_TYPE_TEXT_FEATURESGlobalNlpLocoJSonFormat.KEY_DESCRIPTIONGlobalNlpLocoJSonFormat.KEY_FILTERSGlobalNlpLocoJSonFormat.KEY_LABELGlobalNlpLocoJSonFormat.KEY_NAMEGlobalNlpLocoJSonFormat.KEY_TYPEGlobalNlpLocoJSonFormat.KEY_VALUEGlobalNlpLocoJSonFormat.KEY_VALUESGlobalNlpLocoJSonFormat.from_dataframe_to_json()GlobalNlpLocoJSonFormat.from_json_datatable()GlobalNlpLocoJSonFormat.get_global_explanation()GlobalNlpLocoJSonFormat.get_page()GlobalNlpLocoJSonFormat.is_paged()GlobalNlpLocoJSonFormat.load_index_file()GlobalNlpLocoJSonFormat.mimeGlobalNlpLocoJSonFormat.serialize_data_file()GlobalNlpLocoJSonFormat.serialize_index_file()GlobalNlpLocoJSonFormat.validate_data()

GlobalScatterPlotJSonFormatGlobalSummaryFeatImpJsonDatatableFormatGlobalSummaryFeatImpJsonDatatableFormat.KEY_FEATUREGlobalSummaryFeatImpJsonDatatableFormat.KEY_FREQUENCYGlobalSummaryFeatImpJsonDatatableFormat.KEY_HIGH_VALUEGlobalSummaryFeatImpJsonDatatableFormat.KEY_ORDERGlobalSummaryFeatImpJsonDatatableFormat.KEY_SHAPLEYGlobalSummaryFeatImpJsonDatatableFormat.add_data_frame()GlobalSummaryFeatImpJsonDatatableFormat.load_index_file()GlobalSummaryFeatImpJsonDatatableFormat.mimeGlobalSummaryFeatImpJsonDatatableFormat.serialize_index_file()GlobalSummaryFeatImpJsonDatatableFormat.validate_data()

GlobalSummaryFeatImpJsonFormatGlobalSummaryFeatImpJsonFormat.DATA_FILE_PREFIXGlobalSummaryFeatImpJsonFormat.DEFAULT_PAGE_SIZEGlobalSummaryFeatImpJsonFormat.KEY_FEATUREGlobalSummaryFeatImpJsonFormat.KEY_FEATURES_PER_PAGEGlobalSummaryFeatImpJsonFormat.KEY_FREQUENCYGlobalSummaryFeatImpJsonFormat.KEY_HIGH_VALUEGlobalSummaryFeatImpJsonFormat.KEY_ORDERGlobalSummaryFeatImpJsonFormat.KEY_SHAPLEYGlobalSummaryFeatImpJsonFormat.from_json_datatable()GlobalSummaryFeatImpJsonFormat.get_page()GlobalSummaryFeatImpJsonFormat.is_paged()GlobalSummaryFeatImpJsonFormat.load_index_file()GlobalSummaryFeatImpJsonFormat.mimeGlobalSummaryFeatImpJsonFormat.serialize_data_file()GlobalSummaryFeatImpJsonFormat.serialize_index_file()GlobalSummaryFeatImpJsonFormat.validate_data()

GrammarOfMliFormatHtmlFormatIceCsvFormatIceDatatableFormatIceJsonDatatableFormatIceJsonDatatableFormat.FILE_Y_FILEIceJsonDatatableFormat.KEY_BINIceJsonDatatableFormat.KEY_BINSIceJsonDatatableFormat.KEY_BINS_NUMCAT_ASPECTIceJsonDatatableFormat.KEY_COL_NAMEIceJsonDatatableFormat.KEY_FEATURE_VALUEIceJsonDatatableFormat.KEY_ICEIceJsonDatatableFormat.KEY_PREDICTIONIceJsonDatatableFormat.add_data_frame()IceJsonDatatableFormat.get_local_explanation()IceJsonDatatableFormat.is_on_demand()IceJsonDatatableFormat.load_index_file()IceJsonDatatableFormat.merge_format()IceJsonDatatableFormat.mimeIceJsonDatatableFormat.mli_ice_explanation_to_json()IceJsonDatatableFormat.serialize_index_file()IceJsonDatatableFormat.serialize_on_demand_index_file()IceJsonDatatableFormat.validate_data()

LlmHeatmapLeaderboardJSonFormatLlmLeaderboardJSonFormatLocalDtJSonFormatLocalDtJSonFormat.dt_path_to_node_key()LocalDtJSonFormat.dt_set_tree_path()LocalDtJSonFormat.get_local_explanation()LocalDtJSonFormat.is_on_demand()LocalDtJSonFormat.mimeLocalDtJSonFormat.serialize_index_file()LocalDtJSonFormat.serialize_on_demand_index_file()LocalDtJSonFormat.validate_data()

LocalFeatImpDatatableFormatLocalFeatImpJSonDatatableFormatLocalFeatImpJSonFormatLocalFeatImpJSonFormat.KEY_YLocalFeatImpJSonFormat.is_on_demand()LocalFeatImpJSonFormat.load_index_file()LocalFeatImpJSonFormat.merge_local_and_global_page()LocalFeatImpJSonFormat.mimeLocalFeatImpJSonFormat.serialize_index_file()LocalFeatImpJSonFormat.sort_data()LocalFeatImpJSonFormat.validate_data()

LocalFeatImpWithYhatsJSonDatatableFormatLocalNlpLocoJSonFormatLocalOnDemandHtmlFormatLocalOnDemandTextFormatLocalSummaryFeatImplJSonFormatLocalSummaryFeatImplJSonFormat.is_on_demand()LocalSummaryFeatImplJSonFormat.is_paged()LocalSummaryFeatImplJSonFormat.load_index_file()LocalSummaryFeatImplJSonFormat.mimeLocalSummaryFeatImplJSonFormat.serialize_index_file()LocalSummaryFeatImplJSonFormat.serialize_on_demand_index_file()LocalSummaryFeatImplJSonFormat.validate_data()

MarkdownFormatModelValidationResultArchiveFormatPartialDependenceCsvFormatPartialDependenceDatatableFormatPartialDependenceDatatableFormat.COL_BIN_VALUEPartialDependenceDatatableFormat.COL_F_LTYPEPartialDependenceDatatableFormat.COL_F_NAMEPartialDependenceDatatableFormat.COL_IS_OORPartialDependenceDatatableFormat.COL_MEANPartialDependenceDatatableFormat.COL_SDPartialDependenceDatatableFormat.COL_SEMPartialDependenceDatatableFormat.mimePartialDependenceDatatableFormat.validate_data()

PartialDependenceJSonFormatPartialDependenceJSonFormat.KEY_BINPartialDependenceJSonFormat.KEY_FREQUENCYPartialDependenceJSonFormat.KEY_OORPartialDependenceJSonFormat.KEY_PDPartialDependenceJSonFormat.KEY_SDPartialDependenceJSonFormat.KEY_XPartialDependenceJSonFormat.get_bins()PartialDependenceJSonFormat.get_numcat_aspects()PartialDependenceJSonFormat.get_numcat_missing_aspect()PartialDependenceJSonFormat.load_index_file()PartialDependenceJSonFormat.merge_format()PartialDependenceJSonFormat.mimePartialDependenceJSonFormat.serialize_index_file()PartialDependenceJSonFormat.set_merge_status()PartialDependenceJSonFormat.validate_data()

SaTextFormatTextCustomExplanationFormatTextCustomExplanationFormat.FILE_IS_ON_DEMANDTextCustomExplanationFormat.FILTER_CLASSTextCustomExplanationFormat.FILTER_FEATURETextCustomExplanationFormat.FILTER_NUMCATTextCustomExplanationFormat.add_data()TextCustomExplanationFormat.add_file()TextCustomExplanationFormat.get_data()TextCustomExplanationFormat.is_on_demand()TextCustomExplanationFormat.mimeTextCustomExplanationFormat.set_index_commons()TextCustomExplanationFormat.set_on_demand()TextCustomExplanationFormat.update_index_file()

  - TextFormat
  - WorkDirArchiveZipFormat
  - get_custom_explanation_formats()

- h2o_sonar.lib.api.interpretations module

ExplainerJobExplainerJob.KEY_CHILD_KEYSExplainerJob.KEY_CREATEDExplainerJob.KEY_DURATIONExplainerJob.KEY_ERRORExplainerJob.KEY_EXPLAINER_DESCRIPTORExplainerJob.KEY_JOB_LOCATIONExplainerJob.KEY_KEYExplainerJob.KEY_MESSAGEExplainerJob.KEY_PROGRESSExplainerJob.KEY_RESULT_DESCRIPTORExplainerJob.KEY_STATUSExplainerJob.evaluator_id()ExplainerJob.explainer_id()ExplainerJob.from_dict()ExplainerJob.is_finished()ExplainerJob.success()ExplainerJob.tick()ExplainerJob.to_dict()

  - HtmlInterpretationFormat
  - HtmlInterpretationFormat.Context
  - HtmlInterpretationFormat.KEYWORD_ID_2_NAME
  - HtmlInterpretationFormat.html_footer()
  - HtmlInterpretationFormat.html_h2o_sonar_pitch()
  - HtmlInterpretationFormat.html_head()
  - HtmlInterpretationFormat.html_safe_str_field()
  - HtmlInterpretationFormat.html_svg_h2oai_logo()
  - HtmlInterpretationFormat.to_html()

  - HtmlInterpretationsFormat
  - Interpretation
  - Interpretation.KEY_ALL_EXPLAINERS
  - Interpretation.KEY_CREATED
  - Interpretation.KEY_DATASET
  - Interpretation.KEY_ERROR
  - Interpretation.KEY_EXECUTED_EXPLAINERS
  - Interpretation.KEY_EXPLAINERS
  - Interpretation.KEY_E_PARAMS
  - Interpretation.KEY_INCOMPATIBLE_EXPLAINERS
  - Interpretation.KEY_INCOMPATIBLE_EXPLAINERS_DS
  - Interpretation.KEY_INSIGHTS
  - Interpretation.KEY_INTERPRETATION_LOCATION
  - Interpretation.KEY_I_KEY
  - Interpretation.KEY_I_PARAMS
  - Interpretation.KEY_MODEL
  - Interpretation.KEY_MODELS
  - Interpretation.KEY_OVERALL_RESULT
  - Interpretation.KEY_PROBLEMS
  - Interpretation.KEY_PROGRESS
  - Interpretation.KEY_PROGRESS_MESSAGE
  - Interpretation.KEY_RESULT
  - Interpretation.KEY_RESULTS_LOCATION
  - Interpretation.KEY_SCHEDULED_EXPLAINERS
  - Interpretation.KEY_STATUS
  - Interpretation.KEY_TARGET_COL
  - Interpretation.KEY_TESTSET
  - Interpretation.KEY_VALIDSET
  - Interpretation.dict_to_digest()
  - Interpretation.get_all_explainer_ids()
  - Interpretation.get_explainer_ids_by_status()
  - Interpretation.get_explainer_insights()
  - Interpretation.get_explainer_jobs_by_status()
  - Interpretation.get_explainer_problems()
  - Interpretation.get_explainer_result()
  - Interpretation.get_explainer_result_metadata()
  - Interpretation.get_explanation_file_path()
  - Interpretation.get_failed_explainer_ids()
  - Interpretation.get_finished_explainer_ids()
  - Interpretation.get_incompatible_explainer_ids()
  - Interpretation.get_insights()
  - Interpretation.get_jobs_for_evaluator_id()
  - Interpretation.get_jobs_for_explainer_id()
  - Interpretation.get_model_insights()
  - Interpretation.get_model_problems()
  - Interpretation.get_problems_by_severity()
  - Interpretation.get_scheduled_explainer_ids()
  - Interpretation.get_successful_explainer_ids()
  - Interpretation.is_evaluator_failed()
  - Interpretation.is_evaluator_finished()
  - Interpretation.is_evaluator_scheduled()
  - Interpretation.is_evaluator_successful()
  - Interpretation.is_explainer_failed()
  - Interpretation.is_explainer_finished()
  - Interpretation.is_explainer_scheduled()
  - Interpretation.is_explainer_successful()
  - Interpretation.load()
  - Interpretation.load_from_json()
  - Interpretation.register_explainer_result()
  - Interpretation.set_progress()
  - Interpretation.to_dict()
  - Interpretation.to_html()
  - Interpretation.to_html_4_pdf()
  - Interpretation.to_json()
  - Interpretation.to_pdf()
  - Interpretation.update_overall_result()
  - Interpretation.validate_and_normalize_params()

  - InterpretationResult
  - InterpretationResult.get_evaluator_job()
  - InterpretationResult.get_evaluator_jobs()
  - InterpretationResult.get_explainer_job()
  - InterpretationResult.get_explainer_jobs()
  - InterpretationResult.get_html_report_location()
  - InterpretationResult.get_interpretation_dir_location()
  - InterpretationResult.get_interpretations_html_index_location()
  - InterpretationResult.get_json_report_location()
  - InterpretationResult.get_pdf_report_location()
  - InterpretationResult.get_progress_location()
  - InterpretationResult.get_results_dir_location()
  - InterpretationResult.make_zip_archive()
  - InterpretationResult.remove_duplicate_insights()
  - InterpretationResult.to_dict()
  - InterpretationResult.to_json()

  - Interpretations
  - OverallResult
  - PdfInterpretationFormat

- h2o_sonar.lib.api.judges module

- h2o_sonar.lib.api.models module

DriverlessAiModelDriverlessAiRestServerModelExplainableLlmModelExplainableLlmModel.KEY_CONNECTIONExplainableLlmModel.KEY_H2OGPTE_STATSExplainableLlmModel.KEY_H2OGPTE_VISION_MExplainableLlmModel.KEY_KEYExplainableLlmModel.KEY_LLM_MODEL_METAExplainableLlmModel.KEY_LLM_MODEL_NAMEExplainableLlmModel.KEY_MODEL_CFGExplainableLlmModel.KEY_MODEL_TYPEExplainableLlmModel.KEY_NAMEExplainableLlmModel.KEY_STATS_DURATIONExplainableLlmModel.KEY_STATS_FAILUREExplainableLlmModel.KEY_STATS_RETRYExplainableLlmModel.KEY_STATS_SUCCESSExplainableLlmModel.KEY_STATS_TIMEOUTExplainableLlmModel.clone()ExplainableLlmModel.from_dict()ExplainableLlmModel.to_dict()ExplainableLlmModel.to_json()

  - ExplainableModel
  - ExplainableModel.fit()
  - ExplainableModel.has_transformed_model
  - ExplainableModel.load()
  - ExplainableModel.meta
  - ExplainableModel.predict()
  - ExplainableModel.predict_datatable()
  - ExplainableModel.predict_pandas()
  - ExplainableModel.save()
  - ExplainableModel.shapley_values()
  - ExplainableModel.to_dict()
  - ExplainableModel.to_json()
  - ExplainableModel.transformed_model

ExplainableModelHandleExplainableModelMetaExplainableModelMeta.default_feature_importances()ExplainableModelMeta.feature_importancesExplainableModelMeta.features_metadataExplainableModelMeta.get_model_type()ExplainableModelMeta.has_shapley_valuesExplainableModelMeta.has_text_transformersExplainableModelMeta.is_constantExplainableModelMeta.num_labelsExplainableModelMeta.positive_label_of_interestExplainableModelMeta.to_dict()ExplainableModelMeta.to_json()ExplainableModelMeta.transformed_featuresExplainableModelMeta.used_features

  - ExplainableModelType
    - ExplainableModelType.amazon_bedrock_rag
    - ExplainableModelType.azure_openai_llm
    - ExplainableModelType.driverless_ai
    - ExplainableModelType.driverless_ai_rest
    - ExplainableModelType.from_connection_type()
    - ExplainableModelType.h2o3
    - ExplainableModelType.h2ogpt
    - ExplainableModelType.h2ogpte
    - ExplainableModelType.h2ogpte_llm
    - ExplainableModelType.h2ollmops
    - ExplainableModelType.is_llm()
    - ExplainableModelType.is_rag()
    - ExplainableModelType.mock
    - ExplainableModelType.ollama
    - ExplainableModelType.openai_llm
    - ExplainableModelType.openai_rag
    - ExplainableModelType.scikit_learn
    - ExplainableModelType.to_connection_type()
    - ExplainableModelType.unknown

  - ExplainableRagModel
    - ExplainableRagModel.KEY_COLLECTION_ID
    - ExplainableRagModel.KEY_COLLECTION_NAME
    - ExplainableRagModel.KEY_CONNECTION
    - ExplainableRagModel.KEY_DOCUMENTS
    - ExplainableRagModel.KEY_KEY
    - ExplainableRagModel.KEY_LLM_MODEL_META
    - ExplainableRagModel.KEY_LLM_MODEL_NAME
    - ExplainableRagModel.KEY_MODEL_CFG
    - ExplainableRagModel.KEY_MODEL_TYPE
    - ExplainableRagModel.KEY_NAME
    - ExplainableRagModel.clone()
    - ExplainableRagModel.from_dict()
    - ExplainableRagModel.to_dict()

  - H2o3Model
  - ModelApi
  - ModelVendor
  - OpenAiRagModel
  - PickleFileModel
  - ScikitLearnModel
  - TransformedFeaturesModel
  - guess_model_labels()
  - guess_model_used_features()

- h2o_sonar.lib.api.persistences module

  - ExplainerPersistence
    - ExplainerPersistence.DIR_EXPLAINER
    - ExplainerPersistence.DIR_INSIGHTS
    - ExplainerPersistence.DIR_LOG
    - ExplainerPersistence.DIR_PROBLEMS
    - ExplainerPersistence.DIR_WORK
    - ExplainerPersistence.EXPLAINER_LOG_PREFIX
    - ExplainerPersistence.EXPLAINER_LOG_SUFFIX_ANON
    - ExplainerPersistence.FILE_DONE_DONE
    - ExplainerPersistence.FILE_DONE_FAILED
    - ExplainerPersistence.FILE_EXPLAINER_PICKLE
    - ExplainerPersistence.FILE_EXPLANATION
    - ExplainerPersistence.FILE_INSIGHTS
    - ExplainerPersistence.FILE_ON_DEMAND_EXPLANATION_SUFFIX
    - ExplainerPersistence.FILE_PROBLEMS
    - ExplainerPersistence.FILE_RESULT_DESCRIPTOR
    - ExplainerPersistence.explainer_id
    - ExplainerPersistence.explainer_job_key
    - ExplainerPersistence.get_dirs_for_explainer_id()
    - ExplainerPersistence.get_evaluator_working_file()
    - ExplainerPersistence.get_explainer_ann_log_file()
    - ExplainerPersistence.get_explainer_ann_log_path()
    - ExplainerPersistence.get_explainer_dir()
    - ExplainerPersistence.get_explainer_dir_archive()
    - ExplainerPersistence.get_explainer_insights_dir()
    - ExplainerPersistence.get_explainer_insights_file()
    - ExplainerPersistence.get_explainer_log_dir()
    - ExplainerPersistence.get_explainer_log_file()
    - ExplainerPersistence.get_explainer_log_path()
    - ExplainerPersistence.get_explainer_problems_dir()
    - ExplainerPersistence.get_explainer_problems_file()
    - ExplainerPersistence.get_explainer_working_dir()
    - ExplainerPersistence.get_explainer_working_file()
    - ExplainerPersistence.get_explanation_dir_path()
    - ExplainerPersistence.get_explanation_file_path()
    - ExplainerPersistence.get_explanation_meta_path()
    - ExplainerPersistence.get_key_for_explainer_dir()
    - ExplainerPersistence.get_locators_for_explainer_id()
    - ExplainerPersistence.get_relative_path()
    - ExplainerPersistence.get_result_descriptor_file_path()
    - ExplainerPersistence.load_insights()
    - ExplainerPersistence.load_problems()
    - ExplainerPersistence.load_result_descriptor()
    - ExplainerPersistence.make_dir()
    - ExplainerPersistence.make_explainer_dir()
    - ExplainerPersistence.make_explainer_insights_dir()
    - ExplainerPersistence.make_explainer_log_dir()
    - ExplainerPersistence.make_explainer_problems_dir()
    - ExplainerPersistence.make_explainer_sandbox()
    - ExplainerPersistence.make_explainer_working_dir()
    - ExplainerPersistence.makedirs()
    - ExplainerPersistence.resolve_mli_path()
    - ExplainerPersistence.rm_explainer_dir()
    - ExplainerPersistence.save_insights()
    - ExplainerPersistence.save_json()
    - ExplainerPersistence.save_problems()
    - ExplainerPersistence.username

  - FilesystemPersistence
    - FilesystemPersistence.copy_file()
    - FilesystemPersistence.delete()
    - FilesystemPersistence.delete_dir_contents()
    - FilesystemPersistence.delete_file()
    - FilesystemPersistence.delete_tree()
    - FilesystemPersistence.exists()
    - FilesystemPersistence.flush_dir_for_file()
    - FilesystemPersistence.get_default_cwl()
    - FilesystemPersistence.getcwl()
    - FilesystemPersistence.is_dir()
    - FilesystemPersistence.is_file()
    - FilesystemPersistence.list_dir()
    - FilesystemPersistence.list_files_by_wildcard()
    - FilesystemPersistence.load()
    - FilesystemPersistence.load_json()
    - FilesystemPersistence.make_dir()
    - FilesystemPersistence.make_dir_zip_archive()
    - FilesystemPersistence.save()
    - FilesystemPersistence.save_json()
    - FilesystemPersistence.touch()
    - FilesystemPersistence.type
    - FilesystemPersistence.update()

  - InMemoryPersistence
    - InMemoryPersistence.DIR
    - InMemoryPersistence.Directory
    - InMemoryPersistence.copy_file()
    - InMemoryPersistence.delete()
    - InMemoryPersistence.delete_dir_contents()
    - InMemoryPersistence.delete_file()
    - InMemoryPersistence.delete_tree()
    - InMemoryPersistence.exists()
    - InMemoryPersistence.get_default_cwl()
    - InMemoryPersistence.getcwl()
    - InMemoryPersistence.is_dir()
    - InMemoryPersistence.is_file()
    - InMemoryPersistence.list_dir()
    - InMemoryPersistence.list_files_by_wildcard()
    - InMemoryPersistence.load()
    - InMemoryPersistence.load_json()
    - InMemoryPersistence.make_dir()
    - InMemoryPersistence.make_dir_zip_archive()
    - InMemoryPersistence.save()
    - InMemoryPersistence.save_json()
    - InMemoryPersistence.touch()
    - InMemoryPersistence.type

  - InterpretationPersistence
    - InterpretationPersistence.DIR_AD_HOC_EXPLANATION
    - InterpretationPersistence.DIR_AUTOML_EXPERIMENT
    - InterpretationPersistence.DIR_MLI_EXPERIMENT
    - InterpretationPersistence.DIR_MLI_TS_EXPERIMENT
    - InterpretationPersistence.FILE_COMMON_PARAMS
    - InterpretationPersistence.FILE_EXPERIMENT_ID_COLS
    - InterpretationPersistence.FILE_EXPERIMENT_IMAGE
    - InterpretationPersistence.FILE_EXPERIMENT_TS
    - InterpretationPersistence.FILE_H2O_SONAR_HTML
    - InterpretationPersistence.FILE_INTERPRETATION_HTML
    - InterpretationPersistence.FILE_INTERPRETATION_HTML_4_PDF
    - InterpretationPersistence.FILE_INTERPRETATION_JSON
    - InterpretationPersistence.FILE_INTERPRETATION_PDF
    - InterpretationPersistence.FILE_MLI_EXPERIMENT_LOG
    - InterpretationPersistence.FILE_PREFIX_DATASET
    - InterpretationPersistence.FILE_PROGRESS_JSON
    - InterpretationPersistence.KEY_E_PARAMS
    - InterpretationPersistence.KEY_RESULT
    - InterpretationPersistence.ad_hoc_job_key
    - InterpretationPersistence.base_dir
    - InterpretationPersistence.create_dataset_path()
    - InterpretationPersistence.data_dir
    - InterpretationPersistence.get_ad_hoc_mli_dir_name()
    - InterpretationPersistence.get_async_log_file_name()
    - InterpretationPersistence.get_base_dir()
    - InterpretationPersistence.get_base_dir_file()
    - InterpretationPersistence.get_experiment_id_cols_path()
    - InterpretationPersistence.get_html_4_pdf_path()
    - InterpretationPersistence.get_html_path()
    - InterpretationPersistence.get_json_path()
    - InterpretationPersistence.get_mli_dir_name()
    - InterpretationPersistence.get_pdf_path()
    - InterpretationPersistence.is_common_params()
    - InterpretationPersistence.is_safe_name()
    - InterpretationPersistence.list_interpretations()
    - InterpretationPersistence.load_common_params()
    - InterpretationPersistence.load_explainers_params()
    - InterpretationPersistence.load_is_image_experiment()
    - InterpretationPersistence.load_is_timeseries_experiment()
    - InterpretationPersistence.load_message_entity()
    - InterpretationPersistence.make_base_dir()
    - InterpretationPersistence.make_dir_zip_archive()
    - InterpretationPersistence.make_interpretation_sandbox()
    - InterpretationPersistence.make_tmp_dir()
    - InterpretationPersistence.mli_key
    - InterpretationPersistence.resolve_model_path()
    - InterpretationPersistence.rm_base_dir()
    - InterpretationPersistence.rm_dir()
    - InterpretationPersistence.save_as_html()
    - InterpretationPersistence.save_as_json()
    - InterpretationPersistence.save_as_pdf()
    - InterpretationPersistence.save_common_params()
    - InterpretationPersistence.save_experiment_type_hints()
    - InterpretationPersistence.save_message_entity()
    - InterpretationPersistence.tmp_dir
    - InterpretationPersistence.to_alphanum_name()
    - InterpretationPersistence.to_server_file_path()
    - InterpretationPersistence.to_server_path()
    - InterpretationPersistence.user_dir

  - JsonPersistableExplanations
  - NanEncoder
  - Persistence
    - Persistence.PREFIX_INTERNAL_STORE
    - Persistence.check_key()
    - Persistence.copy_file()
    - Persistence.delete()
    - Persistence.delete_dir_contents()
    - Persistence.delete_file()
    - Persistence.delete_temp_dir()
    - Persistence.delete_tree()
    - Persistence.exists()
    - Persistence.flush_dir_for_file()
    - Persistence.getcwl()
    - Persistence.is_binary_file()
    - Persistence.is_dir()
    - Persistence.is_dir_or_file()
    - Persistence.is_file()
    - Persistence.key_folder()
    - Persistence.list_dir()
    - Persistence.list_files_by_wildcard()
    - Persistence.load()
    - Persistence.load_json()
    - Persistence.make_dir()
    - Persistence.make_dir_zip_archive()
    - Persistence.make_key()
    - Persistence.make_temp_dir()
    - Persistence.make_temp_file()
    - Persistence.path_to_internal()
    - Persistence.safe_name()
    - Persistence.save()
    - Persistence.touch()
    - Persistence.type
    - Persistence.update()

  - PersistenceApi
  - PersistenceDataType
  - PersistenceType
  - RobustEncoder

- h2o_sonar.lib.api.plots module

- h2o_sonar.lib.api.problems module

  - AVIDProblemCode
    - AVIDProblemCode.E0100_BIAS
    - AVIDProblemCode.E0200_EXPLAINABILITY
    - AVIDProblemCode.E0300_TOXICITY
    - AVIDProblemCode.E0400_MISINFORMATION
    - AVIDProblemCode.P0100_DATA
    - AVIDProblemCode.P0200_MODEL
    - AVIDProblemCode.P0300_PRIVACY
    - AVIDProblemCode.P0400_SAFETY
    - AVIDProblemCode.S0400_MODEL_BYPASS
    - AVIDProblemCode.S0500_EXFILTRATION
    - AVIDProblemCode.S0600_DATA_POISONING

  - AVIDProblemCodeType
  - ProblemAndAction
  - ProblemCode
  - ProblemSeverity
  - problems_for_bool_leaderboard()
  - problems_for_cls_leaderboard()
  - problems_for_heat_leaderboard()

- h2o_sonar.lib.api.results module

- Module contents

- h2o_sonar.lib.container package

- Submodules

- h2o_sonar.lib.container.explainer_container module

  - AsyncLocalContainer
  - ContainerRegistry
  - DependencyTreeNode
  - ExplainerContainer
    - ExplainerContainer.TYPE_ID
    - ExplainerContainer.delete_interpretation()
    - ExplainerContainer.explainers_registry
    - ExplainerContainer.gc()
    - ExplainerContainer.get_explainer()
    - ExplainerContainer.get_explainer_class()
    - ExplainerContainer.get_explainer_job_keys_by_id()
    - ExplainerContainer.get_explainer_job_statuses()
    - ExplainerContainer.get_explainer_local_result()
    - ExplainerContainer.get_explainer_log_path()
    - ExplainerContainer.get_explainer_metadata()
    - ExplainerContainer.get_explainer_result_path()
    - ExplainerContainer.get_explainer_snapshot_path()
    - ExplainerContainer.get_interpretation_params()
    - ExplainerContainer.get_interpretation_status()
    - ExplainerContainer.hot_deploy_explainer()
    - ExplainerContainer.list_explainers()
    - ExplainerContainer.list_interpretations()
    - ExplainerContainer.load_interpretations()
    - ExplainerContainer.register_configured_explainers()
    - ExplainerContainer.register_explainer()
    - ExplainerContainer.run_interpretation()
    - ExplainerContainer.setup()
    - ExplainerContainer.unregister_explainer()
    - ExplainerContainer.update_explainer_result()

  - ExplainerExecutor
  - LocalExplainerContainer
  - ParallelExplainerExecutor
  - SequentialExplainerExecutor
  - register_ootb_explainers()

- Module contents

- h2o_sonar.lib.integrations package

- Submodules

- h2o_sonar.lib.integrations.genai module

  - AmazonBedrockFoundationModel
    - AmazonBedrockFoundationModel.customizations_supported
    - AmazonBedrockFoundationModel.inference_types_supported
    - AmazonBedrockFoundationModel.input_modalities
    - AmazonBedrockFoundationModel.model_arn
    - AmazonBedrockFoundationModel.model_id
    - AmazonBedrockFoundationModel.model_lifecycle_status
    - AmazonBedrockFoundationModel.model_name
    - AmazonBedrockFoundationModel.output_modalities
    - AmazonBedrockFoundationModel.provider_name
    - AmazonBedrockFoundationModel.response_streaming_supported

  - AmazonBedrockKnowledgeBase
  - AmazonBedrockRagClient
    - AmazonBedrockRagClient.ES_TEMP_PREFIX
    - AmazonBedrockRagClient.ask_collection()
    - AmazonBedrockRagClient.ask_model()
    - AmazonBedrockRagClient.bedrock
    - AmazonBedrockRagClient.bedrock_agent
    - AmazonBedrockRagClient.bedrock_agent_runtime
    - AmazonBedrockRagClient.bedrock_runtime
    - AmazonBedrockRagClient.config_factory()
    - AmazonBedrockRagClient.connection
    - AmazonBedrockRagClient.create_collection()
    - AmazonBedrockRagClient.get_rag_conf()
    - AmazonBedrockRagClient.iam
    - AmazonBedrockRagClient.is_model_enabled()
    - AmazonBedrockRagClient.list_collections()
    - AmazonBedrockRagClient.list_llm_model_names()
    - AmazonBedrockRagClient.list_llm_models()
    - AmazonBedrockRagClient.opensearchserverless
    - AmazonBedrockRagClient.purge_collections()
    - AmazonBedrockRagClient.purge_uploaded_docs()
    - AmazonBedrockRagClient.s3
    - AmazonBedrockRagClient.s3_resource
    - AmazonBedrockRagClient.sts

  - AwsClient
  - AwsResource
  - H2oGptLlmClient
  - H2oGpteRagClient
    - H2oGpteRagClient.CFG_CHAT_CONVERSATION
    - H2oGpteRagClient.CFG_EMBEDDING_MODEL
    - H2oGpteRagClient.CFG_LLM
    - H2oGpteRagClient.CFG_LLM_ARGS
    - H2oGpteRagClient.CFG_PRE_PROMPT_QUERY
    - H2oGpteRagClient.CFG_PRE_PROMPT_SUMMARY
    - H2oGpteRagClient.CFG_PROMPT_QUERY
    - H2oGpteRagClient.CFG_PROMPT_SUMMARY
    - H2oGpteRagClient.CFG_PROMPT_TEMPLATE_ID
    - H2oGpteRagClient.CFG_RAG_CONFIG
    - H2oGpteRagClient.CFG_SELF_REFLECTION_CONFIG
    - H2oGpteRagClient.CFG_SYSTEM_PROMPT
    - H2oGpteRagClient.CFG_TEMPERATURE
    - H2oGpteRagClient.CFG_TEXT_CONTEXT_LIST
    - H2oGpteRagClient.CFG_TIMEOUT
    - H2oGpteRagClient.CFG_USE_AGENT
    - H2oGpteRagClient.DEFAULT_AGENT_TIMEOUT
    - H2oGpteRagClient.DEFAULT_TIMEOUT
    - H2oGpteRagClient.MODEL_SPEC_AUTO
    - H2oGpteRagClient.MODEL_SPEC_COL
    - H2oGpteRagClient.MODEL_SPEC_COL_OPT_E
    - H2oGpteRagClient.MODEL_SPEC_COL_OPT_N
    - H2oGpteRagClient.TypedLlmConfigDict
      - H2oGpteRagClient.TypedLlmConfigDict.chat_conversation
      - H2oGpteRagClient.TypedLlmConfigDict.llm
      - H2oGpteRagClient.TypedLlmConfigDict.llm_args
      - H2oGpteRagClient.TypedLlmConfigDict.pre_prompt_summary
      - H2oGpteRagClient.TypedLlmConfigDict.prompt_query
      - H2oGpteRagClient.TypedLlmConfigDict.system_prompt
      - H2oGpteRagClient.TypedLlmConfigDict.text_context_list
      - H2oGpteRagClient.TypedLlmConfigDict.timeout
    - H2oGpteRagClient.TypedRagConfigDict
      - H2oGpteRagClient.TypedRagConfigDict.embedding_model
      - H2oGpteRagClient.TypedRagConfigDict.llm
      - H2oGpteRagClient.TypedRagConfigDict.llm_args
      - H2oGpteRagClient.TypedRagConfigDict.pre_prompt_query
      - H2oGpteRagClient.TypedRagConfigDict.pre_prompt_summary
      - H2oGpteRagClient.TypedRagConfigDict.prompt_query
      - H2oGpteRagClient.TypedRagConfigDict.prompt_summary
      - H2oGpteRagClient.TypedRagConfigDict.prompt_template_id
      - H2oGpteRagClient.TypedRagConfigDict.rag_config
      - H2oGpteRagClient.TypedRagConfigDict.self_reflection_config
      - H2oGpteRagClient.TypedRagConfigDict.system_prompt
      - H2oGpteRagClient.TypedRagConfigDict.timeout